Origin of Deepfakes 👟

The roots of deepfakes go back to the 1990s, when academic researchers used CGI to try to create realistic representations of humans. The field advanced rapidly in the 2010s, as massive datasets, breakthroughs in machine learning, and more powerful computing resources came together.

The term “deepfake” itself appeared in 2017, when a Reddit user called “deepfakes” posted fake videos that placed celebrities’ faces into adult scenes. The underlying deep learning techniques had been developing for some time. These include autoencoders and generative adversarial networks (GANs). This was the first time they caught wide public attention. At first, deepfakes circulated mainly in small online communities, but they spread quickly as the tools became easier to access.

People soon began using them to make fake videos of politicians such as Barack Obama and Donald Trump, which raised concerns about fake news. Over time, deepfakes moved from harmless jokes to serious threats such as scams and malicious attacks.

What Are Deepfakes? 🪄

Deepfakes are digitally modified material in which one person’s likeness is convincingly replaced by another’s.

This can be done with several techniques, such as face-swapping, lip-syncing, and body doubling. The result is realistic-looking videos and photographs of people doing or saying things they never did or said.

Deepfake image showing Donald Trump being arrested.

How Are Deepfakes Created? 🧑‍💻

Deepfakes are not simply videos or images that someone has edited by hand in a tool like Photoshop. Instead, algorithms produce them by combining existing footage with newly generated material. For example, machine learning (ML) models learn subtle facial features from photographs and then reproduce or alter them in other videos.

Deepfakes are built with deep learning, which is where the “deep” in the name comes from. Deep learning is a branch of machine learning that uses multi-layer artificial neural networks to learn patterns from data.

The first step in generating a deepfake is to collect many images, videos, or audio clips of the person you want to impersonate. In general, the more varied the expressions and angles in this data, the more convincing the fake will be. This data is then used to train AI models, often generative adversarial networks (GANs). A GAN pits two networks against each other. The generator produces fake content, and the discriminator tries to tell that content apart from real samples. Training continues until the fakes look believable.
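The adversarial game described above is usually written as the minimax objective from the original GAN formulation by Goodfellow et al. (2014). In it, $G$ is the generator, $D$ is the discriminator (outputting the probability that its input is real), $x$ is a real sample, and $z$ is random noise fed to the generator. Deepfake tools may use variants of this objective, so treat it as the textbook form rather than what any particular app does:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator pushes $D(x)$ toward 1 for real samples and $D(G(z))$ toward 0 for fakes. The generator adjusts $G$ so that $D(G(z))$ moves toward 1. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, because that gives stronger gradients early in training.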

For video, the software replaces one person’s face or voice with another’s and can even adjust facial expressions and mouth movements. These tools used to be relatively hard to use, but many now require little technical knowledge.

Nowadays, virtually anyone with an internet connection can create a deepfake. Free apps and websites now do what once required detailed coding knowledge and expensive software. Some tools can swap faces in a video or generate a fake voice with a single click. Step-by-step tutorials for these tools are widely available online.

Some people use these tools for fun, for example by putting their own face into a movie scene. Not every use is harmless, though: the same tools can power scams or fabricated narratives. Because the tools are so readily available, controlling how deepfakes are used has become harder. This raises concerns about privacy, trust, and safety.

Positive Uses of Deepfake Technology 👍

There are well-founded concerns about misleading and malicious videos, but the same technology is also opening up new capabilities in many fields. In entertainment, education, and accessibility, deepfakes are useful for far more than manipulation or deceit.

AI’s ability to generate hyper-realistic images, videos, and voices can expand creativity in ways that were not possible before.

📌 Entertainment (movies, visual effects, voice cloning for actors)

Deepfake tools are a game-changer for entertainment. Filmmakers can create convincing special effects without expensive reshoots or heavy makeup. For example, actors can be made to look younger, or deceased actors can be digitally recreated for a scene.

Voice cloning can fill in for actors who are unavailable because of scheduling conflicts or health problems, by generating AI voices that mimic theirs. Done well, these techniques create believable movie magic and give directors and producers more range in their storytelling.

📌 Education (virtual avatars or digital restoration of historical figures)

Surprisingly, deepfakes have also found quite a few positive applications in education. Imagine a history class in which students can ask questions directly to Albert Einstein or Queen Cleopatra. They could do this live during the lesson or later from home as part of an assignment.

With AI and deepfake technology, educators could create digital avatars of such figures that students find easy to relate to. Museums and documentary makers are using deepfakes to restore old footage and revive lost voices, which creates a more immersive learning environment. Tools like these can bring history and complex topics to life in a fun, engaging way.

📌 Accessibility (voice synthesis for individuals with disabilities)

Deepfake technology could also become an essential communication tool for people with disabilities. For example, voice synthesis can help people who cannot speak because of conditions such as ALS or throat cancer. They can communicate with an AI-generated voice that sounds nearly like their own.

This helps preserve their identity and their bond with family members. Digital facial technology could also help people with mobility impairments by providing realistic avatars that can express emotion or speak for them. Used for accessibility, deepfakes can help people overcome obstacles and improve their lives.

Threats and Dangers of Deepfakes 💀

Deepfakes pose many risks beyond threatening democratic processes. Here are a few ways the technology can be used maliciously:

- Identity Theft and Fraud: Deepfakes could help someone craft fake IDs, passports, or other documents for identity theft and fraud. They can also be used to catfish someone online or in person, which can lead to identity theft and other financial scams. For instance, someone could use deepfake recordings of a person to apply for loans or open bank accounts in that person’s name, potentially causing enormous financial harm.

- Harassment: Deepfakes can be used to generate sexually explicit, violent, or otherwise abusive material. Such videos can be used to harass, intimidate, and abuse others, particularly women and members of marginalized groups.

- Defamation: Deepfakes can also be used to create fake videos or images that show a person doing something they never did, damaging their character and professional career. Public figures such as politicians and celebrities are especially vulnerable. For example, attackers could produce a deepfake of a politician appearing to endorse a contentious policy, or even committing a crime. Such a video could hurt the candidate’s reputation and campaign.

- Misinformation: Deepfakes can be used to spread fabricated information and propaganda, sometimes from foreign actors, in order to manipulate political processes and elections. Audiences may or may not recognize this content as fake. Doctored videos of business and political leaders saying things they never said can sway opinion in local communities or even entire countries.

How to Spot Deepfakes 🎯

The technology keeps improving, but deepfakes can often still be detected, for example by analyzing microexpressions and blinking. Examining small facial details is one of the simplest ways to spot a deepfake. Fake videos often show jerky, out-of-place eye movements or an odd lack of blinking. The edges of the face may also look blurry, or be lit differently from the rest of the frame.
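The blinking cue above can be measured rather than eyeballed. A widely used heuristic is the eye aspect ratio (EAR) from Soukupová and Čech (2016). It is computed from six landmarks around each eye and drops sharply when the eye closes. The minimal sketch below assumes you already have those landmarks for each frame from a face-landmark detector (not shown). The threshold of 0.2, the two-frame minimum, and the sample coordinates are illustrative assumptions, not calibrated values. A clip whose blink rate is far below normal human blinking is a possible red flag, not proof of a fake.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six eye landmarks p1..p6.

    Assumed ordering: p1 and p4 are the eye corners, p2 and p3 lie on the
    upper lid, and p6 and p5 lie on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # lid-to-lid distances
    horizontal = math.dist(p1, p4)                    # corner-to-corner width
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the whole clip; an unusually low rate is a possible red flag."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_series, **kwargs) / minutes


# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3))    # 0.667
print(round(eye_aspect_ratio(closed_eye), 3))  # 0.067
```

In a real pipeline, you would compute the EAR for both eyes in every frame and feed the series to `blinks_per_minute`. Averaging the two eyes is a common choice that makes the signal less noisy.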

The lighting may look off, with shadows falling the wrong way or reflections appearing where they should not. Audio can be another giveaway. Some fake voices sound unnaturally high-pitched, while others sound flat, have strange accents, or are out of sync with the lip movements. In one recent example, a video that appeared to show Tom Hanks endorsing a dental plan turned out to be fake. Viewers who noticed that his mouth did not move quite right were not fooled.

Even if you miss the visual cues, inconsistencies in behavior or context can give a deepfake away. Countless deepfakes show politicians or celebrities saying things they would never actually say. Often these statements do not fit any real, timely, or believable narrative. Before accepting a video or audio clip as authentic, verify where the original came from.

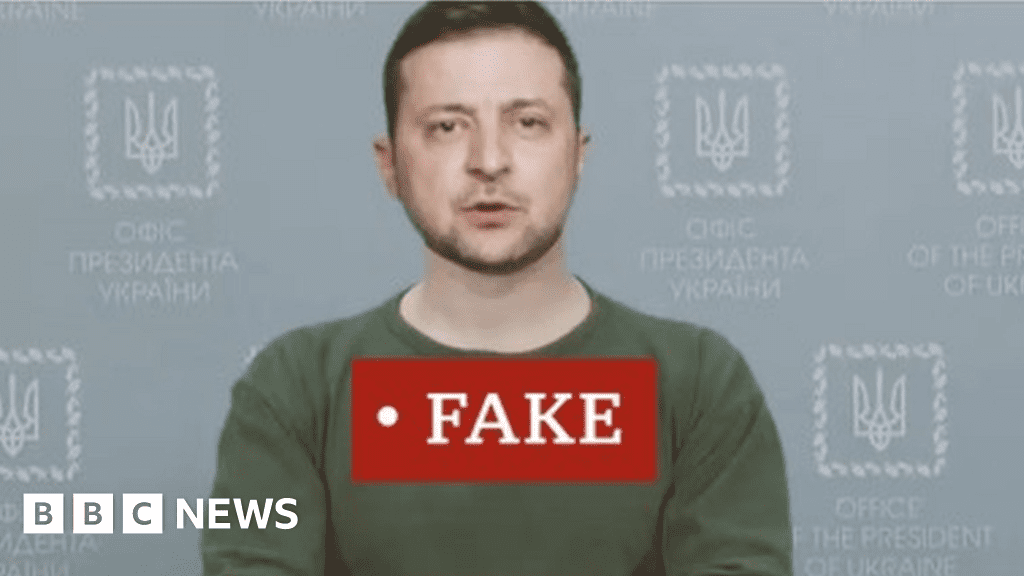

Some social media platforms detect and flag deepfakes while processing uploads, but detection is not always immediate. In one well-known case, a deepfake showed Ukrainian President Volodymyr Zelenskyy urging his troops to lay down their weapons. It was quickly discredited because it contradicted his actual public statements. It is therefore essential to examine the source and context of content critically before believing it. AI-powered deepfake detection tools, which various sites offer, can also help determine whether a photo has been manipulated.

A few more signs to look for:

- Unusual or awkward face alignment.

- Unnatural facial or body movement.

- Unnatural colors.

- Video that looks strange when magnified or zoomed in.

- Inconsistent audio.

- People who never blink.

- Slight inconsistencies in the light reflected in the subject’s eyes.

- Hair and eyes that do not match the apparent age of the skin.

- Glasses with no glare or too much glare, or a glare angle that stays the same as the person moves.

- Spelling mistakes.

- Sentences that do not flow naturally.

- Email addresses from suspicious sources.

- Phrasing unlike that of the supposed sender.

- Messages that are out of context or unrelated to any ongoing discussion, event, or issue.

Keep in mind that AI tools can increasingly mimic natural blinking and other biometric signals, so some of these cues are becoming less reliable.
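The email-address cue in the list above can be partly automated. The minimal sketch below flags sender domains that are not on a trusted list but look very similar to one that is. Lookalike domains are a common trick in impersonation scams. The sketch uses the standard library’s `difflib.SequenceMatcher`. The 0.8 similarity threshold and the example domains are assumptions chosen for illustration, not recommended settings.

```python
from difflib import SequenceMatcher
from typing import Iterable


def domain_of(address: str) -> str:
    """Return the lower-cased part of an email address after the last '@'."""
    return address.rsplit("@", 1)[-1].strip().lower()


def classify_sender(address: str, trusted_domains: Iterable[str],
                    threshold: float = 0.8) -> str:
    """Label a sender as 'trusted', 'lookalike', or 'unknown'.

    A 'lookalike' domain is not on the trusted list but is very similar to
    one that is (for example, the digit 1 swapped in for the letter l).
    """
    domain = domain_of(address)
    trusted = [d.lower() for d in trusted_domains]
    if domain in trusted:
        return "trusted"
    for t in trusted:
        # ratio() is 1.0 for identical strings and falls toward 0.0 as they differ.
        if SequenceMatcher(None, domain, t).ratio() >= threshold:
            return "lookalike"
    return "unknown"


print(classify_sender("cfo@example.com", ["example.com"]))     # trusted
print(classify_sender("cfo@examp1e.com", ["example.com"]))     # lookalike
print(classify_sender("news@unrelated.org", ["example.com"]))  # unknown
```

A string-similarity check like this only catches near-miss spellings. It says nothing about whether a trusted-looking message was actually sent by that organization, so it complements rather than replaces checking with the sender through another channel.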

Technology Required to Develop Deepfakes ⚙️

Creating deepfakes is becoming easier, more accurate, and more common as the following technologies improve:

Much deepfake content is produced with generative adversarial networks (GANs), which pair a generator network with a discriminator network.

Convolutional neural networks (CNNs) analyze visual data. They handle tasks such as facial recognition and movement tracking.

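Autoencoders are neural networks that compress a face into a compact representation of attributes such as facial expressions and head movements. A decoder trained on one person can then render that person’s face with the expressions and movements captured from another person’s video.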

Deepfake audio relies on speech synthesis techniques closely related to natural language processing (NLP). These algorithms analyze the characteristics of a target’s voice and speech. They then generate new audio with those characteristics, saying words the target never said.
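The autoencoder approach described above is often explained with one shared encoder $E$ and two decoders: $D_A$ for person A and $D_B$ for person B. These symbols are our own notation, not taken from any specific tool. Each decoder is trained to reconstruct its own person’s face images, $x_A$ or $x_B$, from the shared encoding. The swap then encodes a frame of person A and decodes it with B’s decoder:

$$
\mathcal{L}(E, D_A, D_B) = \sum_{x_A} \big\lVert x_A - D_A(E(x_A)) \big\rVert^2 + \sum_{x_B} \big\lVert x_B - D_B(E(x_B)) \big\rVert^2,
\qquad \hat{x} = D_B\big(E(x_A)\big)
$$

Because $E$ is shared, it tends to learn features that are common to both faces, such as pose and expression, while each decoder learns to render one identity. Real tools wrap this core in face detection, alignment, masking, and blending steps, and the details vary between implementations.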

Notable Deepfake Cases 🎭

The TikTok account @deeptomcruise is dedicated entirely to deepfakes of Tom Cruise. Its videos still have a hint of the uncanny valley. Even so, they are some of the most convincing deepfake examples, thanks to the performer’s mastery of the actor’s voice and mannerisms and to rapidly advancing technology.

In early 2024, Hong Kong police said that fraudsters had tricked a finance employee at a multinational company into transferring $25 million. The fraudsters used deepfake technology to impersonate the company’s chief financial officer in video conference calls. According to the police, the employee joined a video call with several apparent colleagues, and all of them were deepfake impersonations.

Mark Zuckerberg, the co-founder of Facebook, was the target of a deepfake in 2019 that showed him arrogantly claiming that Facebook “owns” its users. The video was made to show how easily people can use social media platforms like Facebook to deceive the public.

In 2020, concerns grew that deepfakes could be used to interfere with elections and spread election propaganda. Numerous deepfakes depicted then-candidate Joe Biden in exaggerated states of cognitive decline in an attempt to influence the presidential election.

Presidents Barack Obama and Donald Trump have also been targets of deepfake videos. Some were intended to spread disinformation, while others served as satire or entertainment. In 2022, during the Russian invasion of Ukraine, a video appeared that showed Ukrainian President Volodymyr Zelenskyy instructing his forces to surrender to the Russians.

The Legal and Ethical Implications ⚖️

Deepfakes raise complicated legal and ethical questions, and the technology is evolving faster than many countries’ laws. For now, very few laws address deepfakes in general. Those that exist usually target specific harms, such as non-consensual deepfake pornography or election interference.

Some U.S. states, for example, have already passed laws that hold the makers of harmful deepfakes responsible, especially when the deepfakes are used to damage someone’s reputation or to commit fraud. These laws are hard to enforce, however, because creators are difficult to trace, particularly when videos are shared anonymously online. Free speech concerns also arise, especially when the line between satire, parody, and malicious deepfakes is blurry.

From an ethical point of view, deepfakes raise questions about trust, privacy, and the misuse of technology. Manipulating video and audio to make people appear to say or do things they never did can cause very serious harm. With deepfakes, anyone can convincingly impersonate someone else. They can spread false information, ruin reputations, or fool the public when it is least on guard.

Consent is another core problem, because it is easy to violate a person’s rights by using their image or voice without permission and in hurtful ways. Even for more benign uses, some argue that creating deepfakes of real people, often without their knowledge, is ethically questionable. As the technology advances, society will have to balance innovation against accountability for the harm deepfakes cause individuals, both legally and ethically.

Many people are still unfamiliar with deepfakes, their uses, and their risks, so comprehensive rules against them are largely missing. In many deepfake cases, this leaves victims with little legal protection.

That said, there have been recent efforts to pass laws that would make the most harmful deepfakes illegal and allow victims to sue. Among these are the following important government actions:

- The DEFIANCE Act. If enacted, the DEFIANCE Act would be the first federal law to specifically protect individuals from sexually explicit deepfakes. It targets images that were intentionally manipulated or altered without the subject’s consent. Producers of such a deepfake could be held legally liable if they knew, or wilfully ignored, that the victim had not consented to its creation.

- The Preventing Deepfakes of Intimate Images Act. Congressman Joe Morelle introduced this bill in May 2023. It aims to combat the non-consensual production and distribution of digitally altered sexually explicit images by making it a crime to share such images without the subject’s consent.

- The Take It Down Act. Senator Ted Cruz introduced this bill, which would make it a crime to publish, or threaten to publish, non-consensual intimate images (“revenge porn”), including deepfakes. It would also require social media networks and websites to set up a process for removing such images within 48 hours of receiving a legitimate request from a victim.

- The Deepfakes Accountability Act. Congresswoman Yvette Clarke and Congressman Glenn Ivey introduced this bill in September 2023. It would require creators to digitally watermark deepfake content. It would also make it a crime to fail to disclose harmful deepfakes, such as those depicting sexual content, criminal behaviour, incitement of violence, or foreign interference in an election.

Countermeasures and Solutions 🚨

Defending against deepfakes takes technology, awareness, and caution. One of the most effective defenses is AI-based detection tools that can spot manipulation in video, audio, and images. Companies and researchers are building sophisticated algorithms for this. These algorithms look for pixel-level inconsistencies, unnatural facial movements, or irregular audio patterns.

News outlets and social media platforms are also adopting these technologies to identify and remove deepfake content before it spreads widely. Because the tools are not yet perfect, however, people should stay alert and be wary of suspicious content.

On a personal level, protection depends on educating yourself and others about what deepfakes are and how to guard against them. Fakes are easier to spot once you know the common signs, such as glitches around the face, odd lighting, and mismatched audio. Ask where a piece of content came from, and cross-check it with trusted media before sharing anything questionable.

Governments and institutions are also introducing regulations and policies to curb the misuse of deepfakes, including tougher penalties for using them for fraud or misinformation. We can all work together to minimize the risks deepfakes carry. We can stay informed, use the tools available to us, and take care about what we consume and share online.

The Future of Deepfakes 🌵

The future of deepfakes is both exciting and a source of growing concern. As the technology advances, deepfakes keep getting better and harder to detect. They are also sparking interest in industries such as marketing and entertainment, where they can power personalized ads or engaging digital experiences. With that potential, however, comes the risk of misuse.

Cybercriminals and purveyors of misinformation are expected to use deepfakes more, including in future geopolitical conflicts. Scammers have already used deepfakes to impersonate CEOs and trick employees into transferring large sums of money. As these technologies grow more sophisticated, I suspect we’ll see both more interesting uses and more serious threats.

At the same time, efforts to fight deepfakes are growing. Companies such as Microsoft and Adobe are building AI tools that can flag manipulated content, and researchers are adding digital watermarks to help prove authenticity. Governments around the world are also beginning to pass laws and regulations in response, because election after election is at risk of being influenced by fake video or audio.
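Watermarking embeds a signal in the media itself. A related idea, provenance signing, lets a publisher attach a cryptographic tag that a verifier can check later to confirm the content has not been altered. The minimal sketch below illustrates only this signing idea, not watermarking. It uses Python’s standard `hmac` and `hashlib` modules with a shared secret key. The key and byte strings are hypothetical placeholders. Production provenance systems typically rely on public-key signatures and metadata embedded in the file instead, so that verifiers never need the secret.

```python
import hashlib
import hmac


def sign_media(media: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the SHA-256 digest of the media bytes."""
    digest = hashlib.sha256(media).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(media: bytes, key: bytes, tag: str) -> bool:
    """True only if the media is byte-for-byte what was signed with this key."""
    expected = sign_media(media, key)
    return hmac.compare_digest(expected, tag)  # constant-time comparison


# Hypothetical publisher key and clip contents, for illustration only.
publisher_key = b"newsroom-secret-key"
original_clip = b"original video bytes"
tag = sign_media(original_clip, publisher_key)

print(verify_media(original_clip, publisher_key, tag))         # True
print(verify_media(original_clip + b"x", publisher_key, tag))  # False (altered)
```

Any change to the bytes breaks verification, even a harmless re-encode. This is why real provenance systems are designed to track legitimate edits separately rather than relying on a single raw hash.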

As a concrete example, the European Union’s AI Act sets out rules for using AI responsibly, including provisions for dealing with deepfakes. Going forward, the key will be to find creative, innovative ways to apply deepfakes while using effective safeguards to prevent harm and maintain trust in digital content.

Final Thoughts 🧠

Deepfakes pose a growing threat to individuals, society, and democratic processes. They can produce realistic videos of people saying or doing things they never did, often convincingly enough to fool viewers. This ability has far-reaching consequences that demand our attention and action.

We can mitigate the negative effects of deepfakes and help ensure that the technology is used for good. To do so, we need to stay aware of the potential harms and take steps to address them.

FAQ 💡

What are deepfakes, and how do they work?

Deepfakes are synthetic media created using artificial intelligence (AI) and machine learning to manipulate images, videos, or audio. They work by training algorithms on large datasets to replicate a person’s appearance or voice, making the fake content appear real.

Why are deepfakes considered dangerous?

Deepfakes can be used for malicious purposes, such as spreading misinformation, creating fake news, committing fraud, or damaging reputations. Their realistic nature makes it difficult to distinguish between real and fake content, posing significant ethical and security risks.

How can I spot a deepfake?

Spotting deepfakes involves looking for inconsistencies such as unnatural facial movements, poor lip-syncing, odd lighting, or blurry edges. Advanced detection tools and AI-based software are also being developed to identify deepfake content.

What industries are most affected by deepfakes?

Industries like entertainment, politics, journalism, and cybersecurity are heavily impacted by deepfakes. They can be used to create fake celebrity videos, manipulate political speeches, or spread false information, causing widespread harm.

Are there any positive uses for deepfake technology?

Yes, deepfake technology has positive applications, such as in filmmaking for special effects, education for creating realistic simulations, and healthcare for medical training. However, ethical guidelines are crucial to prevent misuse.

What steps can I take to protect myself from deepfakes?

To protect yourself, verify the source of suspicious content, use reputable fact-checking tools, and stay informed about deepfake detection techniques. Additionally, support policies and technologies aimed at combating deepfake misuse.
