
What is Containerization? 📦

Containerization is a way of packaging and delivering software so that it runs reliably and consistently across different computing environments. A container is a lightweight, self-contained executable package that includes everything the software needs to run, such as code, runtime, libraries, and configuration files, while sharing the operating system kernel of the host it runs on.

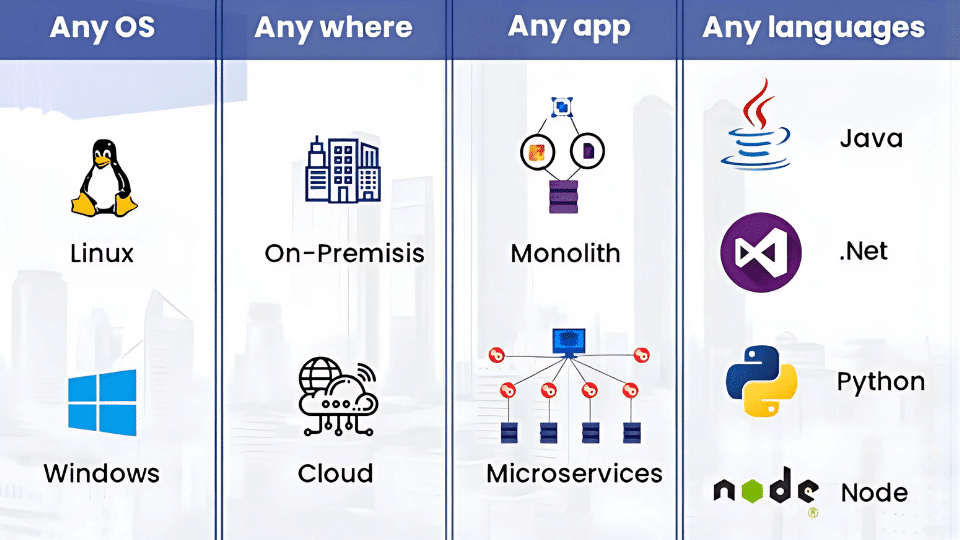

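Containers are designed to be portable: the same container can be deployed on any machine or cloud platform with a compatible container runtime, giving the application a uniform runtime environment. (Linux containers still need a Linux kernel; on macOS and Windows, tools such as Docker Desktop provide one through a lightweight virtual machine.) Containers also isolate the application from the underlying infrastructure and from each other, which improves security, flexibility, and scalability.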

Containerization is widely used in modern software development and deployment, especially through technologies such as Docker and Kubernetes, which have transformed how applications are built, deployed, and maintained.

History of Containerization 🧩

In 2000, FreeBSD 4.0 introduced the “FreeBSD Jail,” an early form of containerization technology developed by Poul-Henning Kamp.

In 2008, Linux kernel 2.6.24 merged cgroups (control groups), a feature originally developed by Google engineers that lets the kernel limit and account for the CPU, memory, and I/O used by groups of processes. Cgroups later became a cornerstone of containerization.

In 2013, Docker was released by Solomon Hykes as an open-source project, making it easier to create, deploy, and run applications using containers.

In 2015, Google released version 1.0 of Kubernetes, an open-source container orchestration system it had announced the year before, enabling automated deployment, scaling, and management of containerized applications.

In 2018, Microsoft released Windows Server 2019, which extended the built-in support for Windows containers first introduced in Windows Server 2016.

In 2020, Docker Desktop added a WSL 2 backend, allowing developers to build, ship, and run containerized applications more seamlessly on Windows 10.

Containerization continues to evolve, with ongoing advances in security, scalability, and portability, as well as growing adoption of serverless container services such as AWS Fargate and Azure Container Instances.

What problems do containers solve? ⁉️

The most common line you have probably heard is “It works on my computer.”

Let’s say you built a complex application that you want to show to a friend. You copy all the files to a pen drive, take it to your friend’s home, and, let me guess, it doesn’t work.

The list of possible causes is long, and a different Node.js version on your friend’s machine is just one example. This is the problem Docker solves: the application ships together with the environment it needs.

Environment Consistency

Containers create a consistent environment for development, testing, and deployment. Because a container encapsulates everything required to run an application, developers can rely on their code behaving the same in any environment where Docker (or a comparable engine) is installed.

Dependency Management

Containers address dependency management by bundling an application together with all of its dependencies, such as language runtimes, libraries, and system packages. The same versions therefore travel from development to testing to production, and two applications on the same host can use conflicting versions of a library without clashing.

Isolation

Containers isolate processes (on Linux, using kernel features such as namespaces and cgroups), so many programs can run side by side on the same host without interfering with one another. Changes made inside one container do not affect the others, which improves system stability and security.

Portability

Containers are lightweight and portable, making it simple to deploy applications across several infrastructure settings, such as on-premises servers, cloud platforms, and developer laptops. This portability streamlines the process of migrating programs across development, testing, and production environments.

Scalability

Containers simplify scaling by letting developers start additional containers within seconds as demand grows. Orchestration tools such as Docker Swarm and Kubernetes make running large-scale containerized applications considerably easier.

Resource Efficiency

Containers share the host system’s kernel, resulting in lower resource overhead than typical virtual machines. This efficiency helps enterprises to maximize resource use and reduce infrastructure expenditures.

How Does Containerization Work? ⚙️

Containerization is the process of packaging software into containers that run consistently and independently on any host with a compatible container runtime. Developers build and distribute container images: files that include all the code and data needed to run a containerized application.

Developers use containerization tools to build container images that follow the Open Container Initiative (OCI) image specification. The OCI is a community-led project that standardizes container image formats and runtimes, so an image built with one tool can be run by another. Images are read-only: a running container writes to its own thin writable layer and never alters the image it was started from.
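The following minimal Python sketch makes the idea of standardized, immutable images more concrete by showing how the OCI image format identifies content. Every blob (the image config and each filesystem layer) is referenced by a `sha256:` digest of its bytes plus its size, and a manifest lists those references. The blobs are hypothetical placeholders, and the config is trimmed far below what the spec requires. Serializing the manifest with `sort_keys=True` is our own simplification, since real registries hash the exact stored bytes. Treat it as an illustration, not a spec-complete implementation.

```python
import hashlib
import json


def digest(blob: bytes) -> str:
    """OCI-style content digest: algorithm name, a colon, then the hex hash."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def descriptor(media_type: str, blob: bytes) -> dict:
    """Reference a blob by media type, digest, and size, as OCI descriptors do."""
    return {"mediaType": media_type, "digest": digest(blob), "size": len(blob)}


def build_manifest(config_blob: bytes, layer_blobs: list[bytes]) -> dict:
    """Assemble a trimmed-down OCI image manifest for the given blobs."""
    return {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
        "layers": [
            descriptor("application/vnd.oci.image.layer.v1.tar+gzip", blob)
            for blob in layer_blobs
        ],
    }


def manifest_digest(manifest: dict) -> str:
    """Digest of the manifest itself (sort_keys is a simplification for this sketch)."""
    return digest(json.dumps(manifest, sort_keys=True).encode())


# Hypothetical placeholder blobs; a real layer is a (usually gzipped) tar archive.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blob = b"placeholder for a gzipped tarball of /app"

manifest = build_manifest(config_blob, [layer_blob])
print(manifest_digest(manifest))
```

The manifest's own digest covers the layer digests. Changing a single byte in any layer therefore yields a different manifest digest, which is why pulling an image by digest always returns the same content.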

- Infrastructure: The hardware layer of the container model, that is, the physical machine, bare-metal server, or cloud instance that hosts the containerized software.

- Host Operating System: The operating system (OS) is the second layer in the containerization architecture. Linux is the most common host OS for containers, both on local machines and on servers. In the cloud, developers often use managed services that provide this layer and let them deploy and manage containerized applications.

- Container Engine: The container engine (often loosely called the container runtime) is the software that runs container images and creates the containers themselves. It acts as a bridge between containers and the operating system, allocating and monitoring the resources each container uses. This lets you run several containers on the same OS while keeping them isolated from one another and from the underlying infrastructure.

- Code and Its Supporting Dependencies: The application code and supporting files, such as library dependencies and configuration files, make up the top layer of the containerization architecture. This layer often also contains the userland files of a base image (for example, a minimal Linux distribution), but not a separate kernel: the container still runs on the host OS kernel.

Containerization vs traditional virtualization

Containerization and traditional virtualization are two methods for creating isolated environments in which to run software. However, they differ in their core design, resource management, and functional characteristics. This section compares containerization with classical virtualization, highlighting their differences and respective advantages.

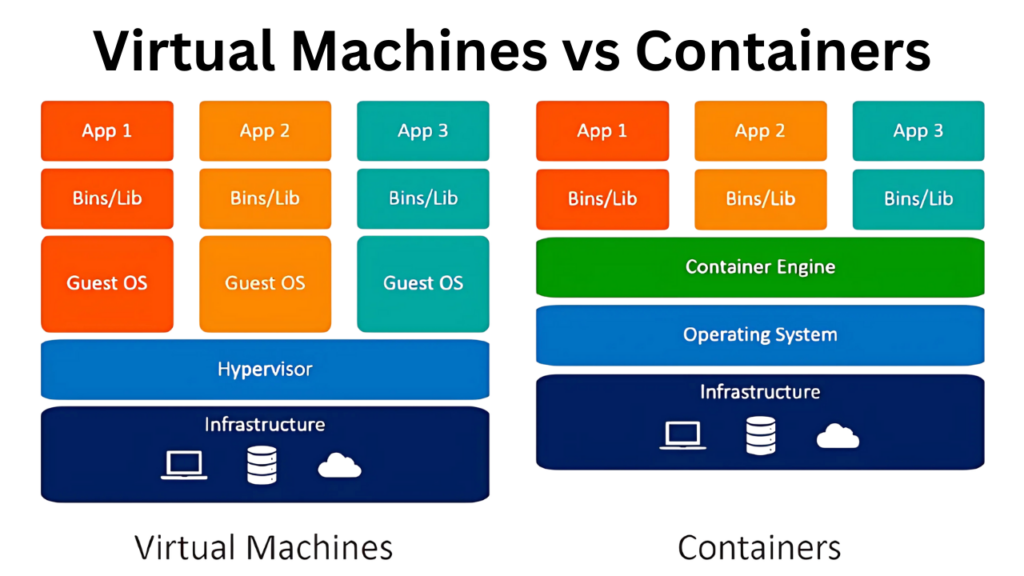

Conventional virtualization uses a hypervisor to create and manage many virtual machines (VMs) on a single physical host. Each VM contains its own guest operating system, runtime, libraries, and application code. This approach provides strong isolation between VMs but comes at a significant cost, because every VM runs a full OS instance of its own. Containerization, on the other hand, uses OS-level virtualization: several containers run on a single OS kernel while keeping separate, isolated user spaces. This eliminates the need for multiple OS instances, dramatically reducing overhead and increasing resource efficiency.

Containers are lighter and more resource-efficient than VMs because they do not need a separate OS instance for each application. The shared OS kernel and streamlined architecture let containers use fewer resources, making better use of the underlying hardware. Containers also start quickly, typically in seconds or even milliseconds, whereas virtual machines can take minutes to boot.

Containers are also more portable than virtual machines, because they encapsulate the application and its dependencies into a single, self-contained unit that behaves consistently across different settings. This reduces the need to build and maintain separate environments for development, testing, and production, resulting in a simpler and more efficient development and deployment pipeline. Virtual machines, on the other hand, often face portability challenges due to their reliance on specific hypervisors and hardware configurations.

Containerization enables greater horizontal scaling, since containers can easily be replicated and distributed across many hosts to handle rising load. Their lightweight design and short start-up times allow applications to scale quickly in response to changing demand. VMs, with their longer boot times and higher resource requirements, are less suited to rapid scaling and can be harder to manage in large-scale deployments.
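One common way to automate horizontal scaling is the rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric ÷ target metric), clamped to a configured minimum and maximum. The Python sketch below applies that rule. The 10% tolerance band is, to our understanding, Kubernetes' documented default. The function name and its default bounds are our own assumptions and not a Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Horizontal scaling rule in the style of the Kubernetes HPA.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical reading: 3 replicas averaging 90% CPU against a 50% target.
print(desired_replicas(current_replicas=3, current_metric=90, target_metric=50))
```

For example, 3 replicas averaging 90% CPU against a 50% target give ceil(3 × 1.8) = 6 replicas. A reading of 52% stays within the tolerance and leaves the count unchanged.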

Containerization has grown significantly in recent years, resulting in a vibrant ecosystem of tools and services that enable container administration, orchestration, monitoring, and security. While traditional virtualization has an established ecosystem, containerization’s growing popularity has fueled more innovation and development in the container domain.

How Containers Revolutionize Development 🧬

◾Microservices Architecture

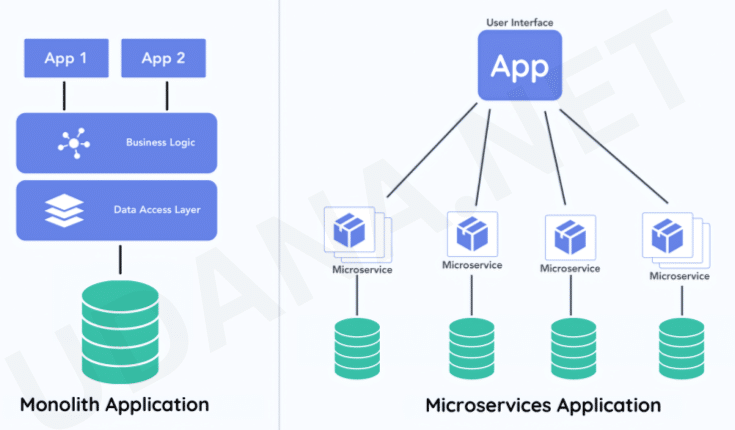

Monolithic: This technique combines all of the components of a software system into a single, closely integrated application. While this strategy allows you to quickly and effortlessly design and deploy an application from scratch, it becomes challenging to maintain and expand as the system grows and requires new features.

Service Oriented Architecture (SOA) is an architectural paradigm that splits a system into smaller, loosely coupled services that may be developed, deployed, and maintained independently. This technique improves maintainability and scalability, although it may still face resource management and deployment issues.

Microservices architecture is a variant of SOA that breaks a system into small, self-contained services that can be built, deployed, and scaled independently. It commonly relies on containerization to improve resource management, deployment, and service isolation.

Many organizations are adopting microservices architecture, and containers suit this approach well because each microservice can run in its own container, isolated from the others. This modularity increases flexibility by allowing teams to build, release, and scale individual services separately. Container orchestration tools make it easier to manage these microservices and help them communicate and coordinate reliably.
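When each microservice runs in its own containers, callers need a way to find a running instance of another service. The Python sketch below is a hypothetical in-memory registry with round-robin selection. It only illustrates the idea of service discovery combined with simple load balancing. `ServiceRegistry` and the addresses are made up, and production orchestrators provide this for you (Kubernetes, for instance, does it through Services and cluster DNS).

```python
from collections import defaultdict


class ServiceRegistry:
    """Hypothetical in-memory service registry with round-robin selection."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = defaultdict(list)
        self._next: dict[str, int] = {}

    def register(self, service: str, address: str) -> None:
        """Record a new container instance (e.g. after it passes a health check)."""
        self._instances[service].append(address)

    def deregister(self, service: str, address: str) -> None:
        """Forget an instance, e.g. when its container stops."""
        self._instances[service].remove(address)

    def resolve(self, service: str) -> str:
        """Return the next instance of `service`, cycling through all of them."""
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no registered instances of {service!r}")
        index = self._next.get(service, 0) % len(instances)
        self._next[service] = index + 1
        return instances[index]


registry = ServiceRegistry()
registry.register("orders", "10.0.0.5:8080")
registry.register("orders", "10.0.0.6:8080")
for _ in range(3):
    print(registry.resolve("orders"))
```

Round-robin is the simplest balancing policy. Real systems also drop instances that fail health checks, which the `deregister` method stands in for here.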

◾Continuous Integration/Continuous Deployment (CI/CD)

Containerization complements DevOps methods that prioritize collaboration, automation, and continuous integration/continuous deployment (CI/CD). Containers make automated testing and deployment pipelines easier, allowing developers to push code changes more frequently and consistently. By combining containerization and CI/CD solutions, teams can automate the build, test, and deployment processes, decreasing manual involvement and increasing overall efficiency.
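At its core, this automation runs stages in a fixed order and stops once one fails, so broken code never reaches deployment. The Python sketch below models that with plain callables. The stage names, the `run_pipeline` and `image_tag` helpers, and the registry path are all hypothetical. A real pipeline would run in a CI service and invoke a container build tool instead of returning booleans. Tagging each image with the commit it was built from is one common convention that keeps deployments traceable.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stopping at the first failure; return the names that passed."""
    passed: list[str] = []
    for name, stage in stages:
        if not stage():
            print(f"stage {name!r} failed; skipping the remaining stages")
            break
        passed.append(name)
    return passed


def image_tag(repository: str, commit_sha: str) -> str:
    """Hypothetical convention: tag each image with the commit it was built from."""
    return f"{repository}:{commit_sha[:12]}"


# Hypothetical stages; a real pipeline would build an image, test inside it, push, deploy.
stages: list[Stage] = [
    ("build", lambda: True),
    ("test", lambda: False),   # simulate a failing test suite
    ("deploy", lambda: True),  # never reached
]
print(run_pipeline(stages))
print(image_tag("registry.example.com/shop/api", "3f2a9c1d8e7b6a5f4e3d2c1b"))
```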

◾Improved Collaboration

When working on a team or open-source project, developing inside containers ensures that everyone uses the same environment, reducing compatibility issues and making collaboration easier. The files that define a container environment, such as a Dockerfile, can be version-controlled alongside your application’s source code. This lets you track changes to the development environment over time, making it easier to roll back to previous configurations or share them with team members.

◾Cost Effectiveness

Containerization can significantly reduce costs for enterprises. Containers are much lighter than traditional virtual machines, so they start faster and consume fewer resources while running. In the cloud, this lowers provisioning costs, because fewer resources are needed to run the same workloads. And because containers are so lightweight, a single server can run more containers than virtual machines, increasing the savings further.

Container Orchestration: Managing Containers at Scale ⚖️

Container orchestration is a technique for automating the deployment, management, and scalability of containerized applications. It simplifies the difficult work of managing huge numbers of containers. Container orchestrators, such as Kubernetes, ensure that containers work together seamlessly across multiple servers and environments. Orchestrators offer a framework for managing container lifecycles, enabling service discovery, and ensuring high availability. This framework is essential for microservice architectures, in which cloud-native applications are made up of many interdependent components.
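Much of the lifecycle management described above rests on a reconciliation loop. The orchestrator repeatedly compares the desired state (for example, "run three `web` containers") with what is actually running, then starts or stops containers to close the gap. Kubernetes controllers follow this pattern. The Python sketch below shows a single pass of such a loop against a hypothetical in-memory `Cluster` and does not use the Kubernetes API.

```python
from itertools import count


class Cluster:
    """Hypothetical stand-in for the state an orchestrator observes and changes."""

    def __init__(self) -> None:
        self.running: dict[str, list[str]] = {}
        self._ids = count(1)

    def start(self, app: str) -> None:
        self.running.setdefault(app, []).append(f"{app}-{next(self._ids)}")

    def stop(self, app: str) -> None:
        self.running[app].pop()


def reconcile(desired: dict[str, int], cluster: Cluster) -> None:
    """One pass of a control loop: move actual replica counts toward the desired ones."""
    for app, want in desired.items():
        have = len(cluster.running.get(app, []))
        for _ in range(want - have):  # too few replicas: start more
            cluster.start(app)
        for _ in range(have - want):  # too many replicas: stop the extras
            cluster.stop(app)


desired = {"web": 3, "worker": 1}
cluster = Cluster()
reconcile(desired, cluster)
cluster.running["web"].pop()  # simulate a crashed container
reconcile(desired, cluster)   # the next pass replaces it
print(cluster.running)
```

Running `reconcile` again after a container disappears restores the missing replica. This is, in miniature, how orchestrators provide self-healing.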

DevOps teams may use container orchestration to streamline provisioning, resource allocation, and scaling, allowing them to fully realize the benefits of containerization and connect it with their business goals.

Container orchestration automates the deployment, management, and scaling of containerized applications. Enterprises leverage orchestrators to control and coordinate massive numbers of containers, ensuring they interact efficiently across different servers.

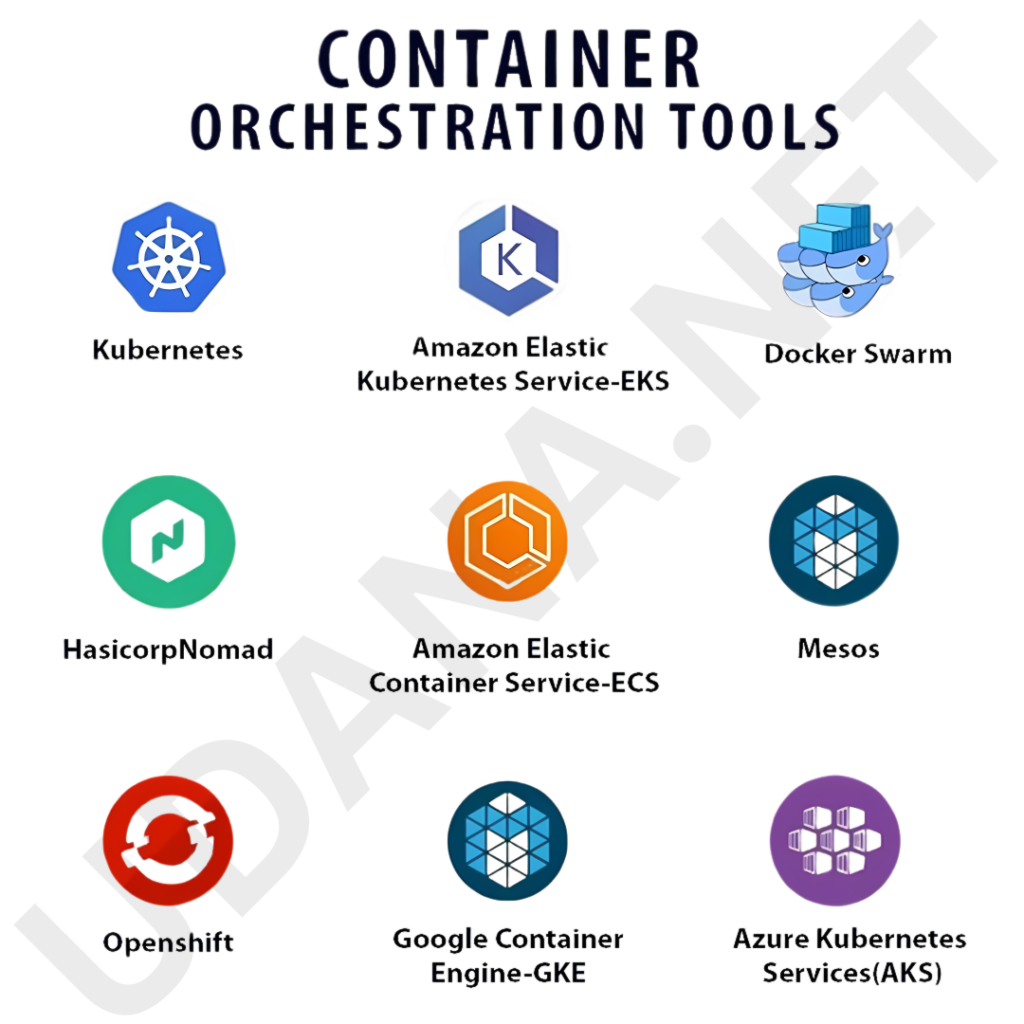

Orchestration Tools

Orchestration tools provide a framework for managing container workloads, allowing DevOps teams to manage the lifecycle of their containers. These tools, also known as orchestration engines, speed up container and microservice deployment, provide networking between services, and adjust resources dynamically to meet demand.

Popular Container Orchestration Engines

- Kubernetes® (K8s)

- SUSE Rancher

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE) / Anthos

- Red Hat OpenShift Container Platform (OCP)

- Mesos

- Cisco Container Platform (CCP)

- Oracle Container Engine for Kubernetes (OKE)

- Ericsson Container Cloud (ECC)

- Docker Swarm

- HashiCorp Nomad

Challenges and limitations ⚓

Containers provide many advantages, but they also come with their own challenges and constraints. Let’s discuss some of the major challenges and limitations of containerization.

Security risks: Because containers share the host OS kernel, they may be subject to kernel-related vulnerabilities. Furthermore, security risks might arise from incorrectly configured containers or the use of obsolete images. To reduce potential threats, companies must follow security best practices such as using minimal base images, updating images on a regular basis, and implementing suitable access controls.
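One concrete practice behind "use minimal base images and update them regularly" is to reference images deliberately rather than through the mutable `latest` tag. The Python sketch below is a hypothetical lint rule, not the API of any scanning tool. It flags image references that are not pinned by digest, and it flags `latest` (Docker falls back to `latest` when no tag is given). There is a trade-off: a digest-pinned image never changes underneath you, so you must bump the digest yourself to pick up security patches.

```python
def audit_image_ref(ref: str) -> list[str]:
    """Hypothetical policy check for an image reference such as 'python:3.12-slim'."""
    name, _, digest = ref.partition("@")
    if digest:
        return []  # pinned to exact content by digest
    # A colon after the last '/' separates the tag; an earlier one is a registry port.
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    tag = name[colon + 1:] if colon > last_slash else "latest"  # Docker's default tag
    warnings = ["not pinned by digest: the tag can later point at different content"]
    if tag == "latest":
        warnings.append("uses 'latest': which version you get is unpredictable")
    return warnings


for ref in ["nginx", "python:3.12-slim", "localhost:5000/shop/api", "alpine@sha256:" + "0" * 64]:
    print(ref, audit_image_ref(ref))
```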

Inherent complexity: Containerization adds new layers of abstraction and management complexity. Organizations are responsible not just for the containers themselves, but also for the underlying infrastructure, orchestration, and monitoring tools. This complexity can steepen the learning curve for developers and operations teams, who may need additional training and experience.

Networking hurdles: Containers may require complex networking setups to enable communication between services, particularly in a microservices design. Managing container networking can be difficult, requiring additional tools and knowledge to ensure effective and secure communication between services.

Storage constraints: Containers are designed to be stateless and ephemeral, so data written to a container’s own filesystem is not durable by default and is lost when the container is removed. Managing persistent storage for stateful applications can be difficult and often requires extra tools and services, such as volumes or external storage solutions.
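This ephemerality comes from how container filesystems are layered. The image's read-only layers are shared, and each container gets its own thin writable layer on top, which is discarded along with the container. Python's `collections.ChainMap` has the same lookup and write semantics: reads search the maps in order, and writes go to the first map. The sketch below therefore uses it to model that copy-on-write behavior. The file paths and contents are hypothetical. Real union filesystems such as OverlayFS also handle deletions and copy whole files up, which this sketch ignores.

```python
from collections import ChainMap

# Hypothetical read-only image layers (path -> contents), shared by every container.
base_layer = {"/etc/os-release": "Debian", "/usr/bin/python3": "<binary>"}
app_layer = {"/app/main.py": "print('hi')", "/app/config.yaml": "debug: false"}


def start_container() -> ChainMap:
    """Give each new container an empty writable layer on top of the image layers."""
    # ChainMap looks keys up left to right and writes only to the first mapping.
    return ChainMap({}, app_layer, base_layer)


first = start_container()
first["/app/config.yaml"] = "debug: true"   # copy-on-write: lands in the writable layer
first["/tmp/session.db"] = "cached data"    # new file, also only in the writable layer
print(first["/app/config.yaml"], "| image still has:", app_layer["/app/config.yaml"])

replacement = start_container()             # container removed and recreated
print("/tmp/session.db" in replacement)     # the writable layer's data is gone
```

Data that must survive a container being replaced belongs in a volume or external store, which lives outside these layers.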

Resource isolation: While containers provide some isolation, it is weaker than the isolation between virtual machines. This can lead to performance issues or resource contention among containers, particularly in high-density deployments. Orchestrators such as Kubernetes offer resource-management features, including per-container requests and limits and per-namespace quotas, to help ease this constraint.
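In Kubernetes, each container can declare resource requests and limits. Requests are used to decide where the container can be placed, and limits are enforced at run time through the kernel's cgroups. The Python sketch below shows only the placement side, using a simple first-fit strategy: a pod is scheduled on the first node whose unreserved CPU and memory cover its requests. The node sizes and pod names are hypothetical. The real Kubernetes scheduler filters and scores nodes rather than taking the first fit.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A hypothetical worker node and the capacity not yet reserved by requests."""
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    pods: list[str] = field(default_factory=list)


def schedule(pod: str, cpu_request: int, memory_request: int,
             nodes: list[Node]) -> Optional[str]:
    """First-fit placement: reserve the pod's requests on the first node that can hold them."""
    for node in nodes:
        if cpu_request <= node.free_cpu_millicores and memory_request <= node.free_memory_mib:
            node.free_cpu_millicores -= cpu_request
            node.free_memory_mib -= memory_request
            node.pods.append(pod)
            return node.name
    return None  # nothing fits: the pod waits, like a Pending pod in Kubernetes


nodes = [Node("node-a", 2000, 4096), Node("node-b", 4000, 8192)]
print(schedule("web-1", 1500, 1024, nodes))  # fits on node-a
print(schedule("web-2", 1000, 1024, nodes))  # node-a has only 500m CPU left
print(schedule("db-1", 8000, 2048, nodes))   # too big for any node
```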

Real-World Examples of Containerization 🌐

Everything at Google, one of the ‘Big Five’ tech firms, runs in containers. Containerization enables Google’s development teams to deploy software efficiently and operate at unprecedented scale. It’s no surprise that Google created Kubernetes, today’s most popular container orchestration software. Containers play an equally central role at other major tech companies. Let us consider a few examples.

Pinterest moved its platform to containers to resolve operational issues and handle its growing workload. Pinterest first migrated its services to Docker to achieve an immutable infrastructure and free up the engineering effort spent maintaining Puppet. After containerizing its services, the company planned to adopt container orchestration and build a multi-tenant cluster with a uniform interface for long-running services and batch jobs.

eBay’s development teams used Docker containers for development and testing, with Kubernetes to orchestrate them, and the company later began running production applications in containers as well. Now, eBay’s integration architecture is fully container-based. The eBay system contains approximately 1,000 microservices, which continue to evolve in response to user needs.

Docker Enterprise Edition supports Visa’s aim of providing electronic payments to everyone, everywhere, and making global economies safer by digitizing currency. After going live with a Docker Containers-as-a-Service architecture, Visa observed a 10x increase in scalability. Visa also wanted new engineers to be able to deploy code on their first day, which Docker made possible.

Netflix uses containerization to manage its large-scale microservices architecture. Containers facilitate rapid deployment, scaling, and consistency across its global infrastructure.

Spotify employs containerization to streamline its microservices deployment. Containers help in managing and scaling its services efficiently, ensuring consistent performance across its platform.

The Future of Containerization 🌱

As the world of coding and app development shifts towards containerization and the use of microservices, we must overcome a few challenges. Working through them will be critical for making the most of these strategies and staying ahead in the rapidly changing world of software development.

As applications are broken down into smaller, more focused services, the total system complexity increases. We need to discover efficient solutions to deal with this complexity, especially when it comes to service dependencies and communication. Standardized processes, tools, and frameworks can help manage this complexity, but we must be willing to constantly learn and adapt in order to keep up.
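One recurring form of this complexity is start-up order: a service should not start before the services it depends on. The Python sketch below derives a valid order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+) and reports circular dependencies. The service graph is hypothetical. Tools such as Docker Compose apply the same idea to services declared with `depends_on`.

```python
from graphlib import CycleError, TopologicalSorter


def startup_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Order services so that each starts only after everything it depends on."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # graphlib puts the nodes that form the cycle in err.args[1].
        raise ValueError(f"circular service dependency: {err.args[1]}") from err


# Hypothetical services, each mapped to the services it needs.
services = {
    "web": {"api"},
    "api": {"db", "cache"},
    "worker": {"db", "queue"},
}
print(startup_order(services))
```

Services that appear only as dependencies (`db`, `cache`, `queue`) are added to the graph automatically. Several valid orders can exist, so the exact sequence printed may vary.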

As the number of containers and microservices grows, so do the potential attack vectors for applications. It is critical to maintain the security of these settings, which necessitates a comprehensive approach including all stages of software development. This includes putting strong security procedures in place, such as adequate network and container isolation, secure communication, and vulnerability management.

Although containerization and microservices naturally promote scalability, managing that scaling becomes difficult as applications grow. We must plan how to scale these environments efficiently, which involves optimizing resource usage, load balancing, and automated scaling. Success requires a thorough understanding of the technologies and infrastructure involved, such as Kubernetes, as well as regular monitoring and tuning.

Many businesses still have legacy apps or systems that cannot be easily migrated to a containerized and microservices-based architecture. Finding ways to link these systems with newer services and gradually transition them to a microservices architecture presents a substantial challenge.

The rapid advancements in containerization and microservices have resulted in a skills shortage. Companies must provide training and education to their employees to guarantee that they have the necessary abilities to use these technologies efficiently. Creating a culture of continual learning and teamwork will be critical for staying current with the newest trends and practices.

Containerization and microservices might lead to performance concerns due to greater abstraction and inter-service communication. We must concentrate on optimizing performance, taking into account issues such as resource allocation, caching, and effective communication protocols.

Takeaways 🛫

In summary, containerization provides considerable benefits for managing applications in distributed systems, but it also creates issues that must be carefully considered and managed. Organizations can effectively harness containerization in distributed environments by using container orchestration tools, following design and implementation best practices, and addressing security concerns.

FAQ 💡

What is containerization in software development?

Containerization is a lightweight, portable method of packaging and running applications along with their dependencies in isolated environments called containers, ensuring consistency across different computing environments.

How do containers revolutionize software development?

Containers streamline development by enabling consistent environments, faster deployment, scalability, and efficient resource utilization, making it easier to build, test, and deploy applications.

What are the key benefits of using containers?

Containers offer benefits like portability, faster deployment, improved scalability, resource efficiency, and consistent performance across development, testing, and production environments.

How do containers differ from virtual machines (VMs)?

Unlike VMs, each of which runs a full guest operating system on virtualized hardware, containers share the host OS kernel, making them lighter, faster to start, and more resource-efficient.

What is Docker, and how does it relate to containerization?

Docker is a popular platform for developing, shipping, and running containers. It simplifies container management and has become synonymous with modern containerization practices.

What are some common use cases for containerization?

Containers are widely used for microservices architecture, continuous integration/continuous deployment (CI/CD), cloud-native applications, and DevOps practices.

How does containerization improve DevOps workflows?

Containers enhance DevOps by providing consistent environments, enabling faster deployments, and facilitating collaboration between development and operations teams.

What is Kubernetes, and why is it important for containerization?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications, making it essential for large-scale container environments.

What challenges come with containerization?

While containers offer many advantages, challenges include managing container security, networking complexities, and ensuring proper monitoring and logging in dynamic environments.

What is the future of containerization in software development?

The future of containerization includes advancements in orchestration tools, improved security features, and deeper integration with cloud-native technologies, further transforming how applications are built and deployed.