
Back when my parents worried most about too much television ‘ruining my brain’, video streaming was merely a convenient alternative to traditional media. These days, it is central to our daily lives. The way we watch video has shifted dramatically: whether you’re watching your favorite series, catching a live sports event, or streaming educational content, the viewing experience looks nothing like it once did.

Now that Netflix, YouTube, and Twitch are fully part of the global cultural fabric, it’s almost impossible to imagine streaming as the niche activity it once was. Video streaming technology has revolutionized the way entertainment is experienced, but it hasn’t stopped at entertainment; it has completely redefined how businesses, educators, and creators connect with their audiences. The question is: what has happened, and where are we going?

The Early Days of Video Streaming 📹

Live streaming is really popular these days. Live video streaming has become an essential part of our lives, from seeing your favorite musician perform live to following the greatest sporting events in real time. But are you aware that the notion of live video streaming is not new? It’s been around since the early 1990s!

The first public demonstration of live video streaming via the Internet occurred in 1993 at Xerox PARC in Palo Alto, California, during a concert by the band Severe Tire Damage.

In 1995, ESPN and MLB agreed to broadcast a baseball game live over the internet: a game between the Seattle Mariners and the New York Yankees. The event was a major experiment, as it was one of the first live sporting events streamed via the internet.

George Washington University hosted an interactive webcast on November 8, 1999, in which President Bill Clinton participated to talk about technology policy and the digital economy. It was a landmark in online broadcasting, since it was the first appearance of a sitting United States president in a live, internet-based webcast.


The early 2000s witnessed substantial developments in internet technology, including higher broadband speeds and widespread DSL usage, which greatly enhanced the live video streaming experience. This period also saw the debut of services that would go on to become industry giants in live video streaming.

Chad Hurley, Steve Chen, and Jawed Karim, all former PayPal employees, founded YouTube in February 2005. The platform was created so that people could easily upload, share, and view videos at a time when no user-friendly way to share video existed. YouTube at first relied on Adobe Flash Player, which was then widely used to play multimedia content, to stream videos. When Google acquired YouTube in 2006 for $1.65 billion in stock, the platform gained the financial backing that fueled its later growth.

Netflix was founded back in 1997 as a mail-based DVD service. In 2007 it pivoted from mailing discs to video streaming, introducing an instant streaming service that let users watch movies and TV shows without needing physical discs. Netflix’s video streaming technology began on Microsoft Silverlight and then moved to HTML5 and adaptive bitrate streaming for better quality and smoother playback across multiple devices and connection speeds.

I have mentioned only a few notable events and platforms here. There were many other developments in video streaming during those decades.

Improved Internet Infrastructure 🗼

Bandwidth is vital in determining the quality of streaming services. It refers to the rate at which data can be sent over an internet connection, and sufficient bandwidth is critical for flawless streaming. Higher bandwidth provides smoother playback, faster loading times, and a better overall video streaming experience. In contrast, restricted or overloaded bandwidth can cause buffering, lag, and worse visual quality.

To put it simply, bandwidth serves as a channel via which streaming material is sent from the provider to the user’s device. The bigger the pipeline, the more data can pass through it, resulting in a higher quality video streaming experience. Without appropriate bandwidth, the connection gets stressed, resulting in pauses and reduced visual and audio quality. As a result, for consumers who value the quality of their streaming experience, having a dependable and powerful internet connection is critical.
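To make the pipeline picture concrete, here is a rough back-of-the-envelope sketch in Python. The bitrates for SD, HD, and 4K and the 1.5x headroom factor are illustrative assumptions, not figures from any particular streaming service.

```python
def data_per_hour_gb(bitrate_mbps: float) -> float:
    """Gigabytes transferred by one hour of video at a constant bitrate."""
    megabits = bitrate_mbps * 3600   # seconds in an hour
    megabytes = megabits / 8         # 8 bits per byte
    return megabytes / 1000          # decimal gigabytes


def can_sustain(connection_mbps: float, bitrate_mbps: float,
                headroom: float = 1.5) -> bool:
    """True if the connection exceeds the stream bitrate by a safety factor.

    The headroom factor is an assumption that leaves room for other traffic
    and for short dips in throughput.
    """
    return connection_mbps >= bitrate_mbps * headroom


# Illustrative bitrates only; real services vary widely.
for label, mbps in [("SD", 1.5), ("HD", 5.0), ("4K", 20.0)]:
    print(f"{label}: {data_per_hour_gb(mbps):.2f} GB/hour, "
          f"fits a 10 Mbps line: {can_sustain(10.0, mbps)}")
```

Under these assumptions, a 10 Mbps connection comfortably carries the SD and HD streams but not the 4K one, which is where buffering or a drop in quality would start.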

The evolution of internet infrastructure has greatly changed how we get and consume digital content. As broadband was rolled out, household and business connections became faster and more stable, making it possible to stream high-definition video without interruption. Growing bandwidth and ever wider broadband availability have made video streaming services such as YouTube, Netflix, and Hulu accessible to a worldwide audience.

It was this shift that enabled the transition from traditional TV viewing to on-demand, high-definition viewing wherever and whenever the user wants. Streaming redefined digital entertainment: it meant no longer being constrained by dial-up or slow speeds.

From there, 4G networks, with their faster mobile data speeds and lower latency, accelerated the growth of streaming, with considerable overlap with music streaming; in retrospect this growth appears to have been inevitable. Users were now able to stream video in HD, and even 4K, on their smartphones, tablets, and laptops while on the move. 4G also allowed video streaming apps to become far more sophisticated, able to stream even in urban centers with heavy user demand.

The real revolution, however, came with the rise of 5G networks. With ultra-fast download speeds, low latency, and support for a huge number of simultaneous connections, 5G has redefined video streaming: high-definition VR/AR, real-time live streaming with virtually no lag, and crisp 4K and even 8K video. As 5G networks continue to expand, they will change entertainment as well as gaming, remote work, and digital communication.

Evolution of Video Codecs and Streaming Protocols 📶

The evolution of video codecs and video streaming protocols has played a vital role in shaping the video streaming landscape.

Flash and Silverlight were proprietary browser plugins that, during the 2000s, took streaming technology beyond what HTML could give at the time. They worked around HTML’s restrictions and offered features such as media stream sources and content protection (DRM) to improve the video streaming experience.

Silverlight and Flash began to be phased out around 2011-2012 as HTML5 progressed, providing the APIs needed to implement video streaming protocols such as DASH, HLS, and Smooth Streaming within the browser while also including DRM capabilities. HTML5 survived its early growing pains and emerged as the leading streaming platform. By 2014-2015, HTML5 had advanced enough to handle basic video streaming capabilities as well as DRM-enabled content protection.

Role of Video Codecs and Their Evolution ⚙️

A video codec is a technology essential to digital video processing, used to compress (encode) and decompress (decode) video data. This compression lowers file sizes and bandwidth needs, which improves video storage and streaming efficiency. Video codecs use complex algorithms to discard unnecessary or redundant data while preserving as much video quality as possible.
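To get a feel for what “redundant” means here, the toy Python sketch below stores a second frame as its difference from the first one instead of storing it whole. It is only an illustration under simplifying assumptions: the frames are made-up byte strings, and `zlib` stands in for a real entropy coder. Actual codecs such as H.264 rely on motion compensation, transforms, and quantization, none of which appear here.

```python
import random
import zlib

FRAME_SIZE = 4096  # bytes per toy "frame" (an assumption, not a real resolution)

rng = random.Random(0)
# Frame 1: noisy content that a general-purpose compressor can barely shrink.
frame1 = bytes(rng.getrandbits(8) for _ in range(FRAME_SIZE))

# Frame 2: identical to frame 1 except for one small region that changed.
changed = bytearray(frame1)
changed[100:132] = bytes(32)
frame2 = bytes(changed)


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise difference between two equally sized frames."""
    return bytes(x ^ y for x, y in zip(a, b))


delta = xor_bytes(frame1, frame2)        # zero wherever nothing changed

standalone = len(zlib.compress(frame2))  # frame 2 compressed on its own
as_delta = len(zlib.compress(delta))     # frame 2 stored as a difference
restored = xor_bytes(frame1, delta)      # decoder side: rebuild frame 2

print(f"standalone: {standalone} bytes, as delta: {as_delta} bytes")
print("lossless round trip:", restored == frame2)
```

Because most of the second frame repeats the first, the difference is almost entirely zeros and compresses to a small fraction of the standalone size. That is the basic intuition behind predicting one frame from another.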

1990s:

In the 1990s, the Moving Picture Experts Group (MPEG) rose to prominence, producing a set of video coding standards that became popular worldwide.

MPEG-1, released in 1993 and used in Video CD (VCD) players, offers a resolution of 352×240 pixels at 30 frames per second, allowing for higher-quality video on digital media.

Then came MPEG-2, which was developed for DVD players. It maintained frame rate but increased resolution to 720×480 pixels, improving video quality for home entertainment.

2000s and beyond:

The most significant advancement in recent decades has been the H.264/MPEG-4 AVC codec, which was first standardized in 2003 and remains one of the most widely used video codecs.

H.264/MPEG-4 AVC stands out for its adaptability and efficiency, since it is utilized in a wide range of applications, from video recording to streaming, and provides high-quality video compression appropriate for a variety of platforms and devices.

How Does a Video Codec Work? 🛠️

A codec encompasses the basic data transformation operations for both video and audio, acting as a link between raw media material, transmission over networks, and ultimate consumption. The codec’s journey begins with the original material, which is raw video footage with its original sound. These parts are first handled individually, with the video passing through a video codec and the audio passing via an audio codec.

To keep the audio and video streams in sync, they are compressed and then merged, or wrapped, into a single container file. This file uses less bandwidth and space than the original material and is then prepared for storage or transmission across data networks. The procedure is reversed at the receiving end. The file is unwrapped and decoded using the same or a compatible codec, restoring the compressed byte sequence to audio and video streams. In this phase, the codec balances the need for efficient transmission against the inherent loss from compression by reconstructing the data to a state as close to the original as feasible.

Playback, the last stage, presents the decompressed audio and video simultaneously to create a smooth viewing experience. The process above illustrates the codec’s job of balancing quality against compression. Different codecs make different trade-offs, from larger, higher-quality files that can be harder to work with to smaller files of quite acceptable quality.

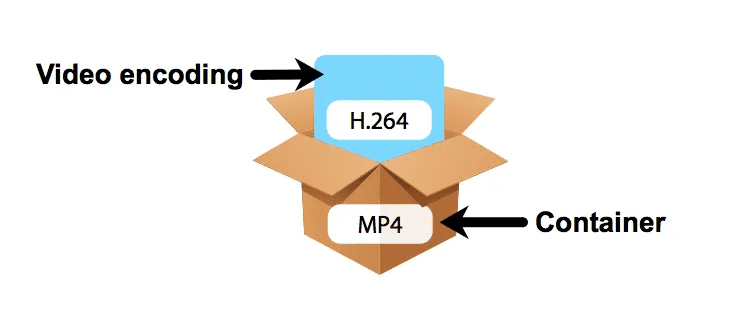

Video Container vs Video Codec 📦

A video container, sometimes referred to as a container format, acts as a “wrapper” or “holder” for different kinds of multimedia data. Video, audio, subtitles, and any other metadata are all contained and arranged into a single file structure by this file format. To ensure that these disparate data streams replay in unison, the container controls how they are linked and stored.

Since the codecs handle the compression and manipulation of the data streams, the video container has no effect on the quality of the audio or video. Rather, the container format specifies how the streams are combined and work with software or playback devices.

Multiple codecs for video and audio streams are supported by a video container, giving users the flexibility to select the most appropriate codecs for their requirements and have them included in a single, manageable package. Because of their versatility, video containers are essential to multimedia distribution because they provide a standardized method of distributing complex media content across a range of platforms and devices.
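The wrapper idea can be sketched in a few lines of Python. The packet layout below (stream id, timestamp, payload length) is a hypothetical format invented for this illustration; it is far simpler than MP4 or MKV, and the payloads are placeholder byte strings standing in for codec output.

```python
import struct
from typing import Iterator, List, Tuple

# Hypothetical packet header: stream id (1 byte), timestamp in ms (4 bytes),
# payload length (4 bytes), all big-endian.
HEADER = struct.Struct(">BII")

Packet = Tuple[int, int, bytes]  # (stream_id, timestamp_ms, payload)


def mux(packets: List[Packet]) -> bytes:
    """Interleave packets from all streams in timestamp order."""
    out = bytearray()
    for stream_id, ts, payload in sorted(packets, key=lambda p: p[1]):
        out += HEADER.pack(stream_id, ts, len(payload))
        out += payload  # the already-encoded data is copied untouched
    return bytes(out)


def demux(blob: bytes) -> Iterator[Packet]:
    """Walk the container and yield each packet back out."""
    offset = 0
    while offset < len(blob):
        stream_id, ts, length = HEADER.unpack_from(blob, offset)
        offset += HEADER.size
        yield stream_id, ts, blob[offset:offset + length]
        offset += length


VIDEO, AUDIO = 0, 1
packets = [
    (VIDEO, 0, b"<encoded video frame 0>"),
    (VIDEO, 40, b"<encoded video frame 1>"),
    (AUDIO, 0, b"<encoded audio chunk 0>"),
    (AUDIO, 21, b"<encoded audio chunk 1>"),
]

blob = mux(packets)
recovered = list(demux(blob))
print([(stream_id, ts) for stream_id, ts, _ in recovered])
```

The container only decides how packets are ordered and labeled. The payload bytes come back exactly as they went in, which is why the choice of container does not change audio or video quality.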

Here are some of the most popular video containers:

◾ MP4 (MPEG-4)

One of the most widely used video containers, especially for online video streaming, portable devices, and social media. MP4 supports a wide range of video and audio codecs, including H.264 (video) and AAC (audio). It also supports subtitles and metadata. Highly compatible across devices, platforms, and browsers. Great balance between file size and quality. Popular among video streaming platforms (e.g., YouTube, Vimeo).

◾ MKV (Matroska Video)

A flexible, open-source container that can support virtually any type of video, audio, subtitle, and metadata. MKV is often used for high-quality video files like HD movies and Blu-ray rips. Supports almost all video/audio codecs (e.g., H.264, H.265, VP9, AAC, MP3). Can store multiple subtitle tracks, audio tracks, and chapters. Excellent for high-definition video (HD, 4K) and streaming.

◾ AVI (Audio Video Interleave)

A legacy video container format introduced by Microsoft. AVI files can contain both video and audio data, but the format is less efficient than modern formats like MP4 or MKV and lacks some advanced features. Widely supported on older systems and legacy devices. Files tend to be larger, since AVI is often paired with older, less efficient codecs. Limited support for modern features (e.g., subtitles, advanced metadata).

◾ MOV (QuickTime Movie)

Developed by Apple, MOV is primarily used by QuickTime Player and is also compatible with iOS and macOS devices. It can contain video and audio, as well as interactive content. Good for high-quality video, often used for professional video editing. Widely used in macOS-based systems. Less universal compatibility compared to MP4 or MKV.

Popular Video Formats 🎭

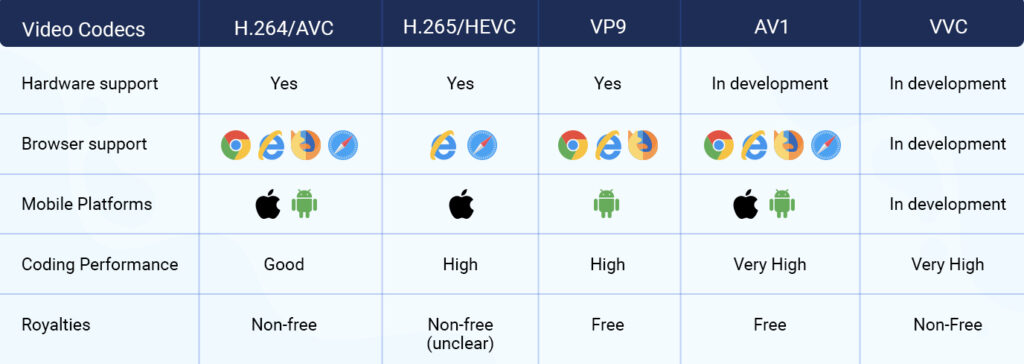

🔸 H.264/AVC (Advanced Video Coding)

Also known as MPEG-4 Part 10, H.264 has been one of the most commonly used formats for video compression. It provides high-quality video at comparatively low bit rates, which makes it efficient and broadly compatible. Because of its versatility and support for resolutions up to 8K, it can be found in everything from Blu-ray discs to online video streaming services.

🔸 H.265/HEVC (High Efficiency Video Coding)

This successor to H.264 doubles the data compression ratio at the same level of video quality or substantially improves video quality at the same bit rate. Increased efficiency makes it suitable for streaming high-quality video over the internet, especially where bandwidth may be limited.

🔸 AV1 (AOMedia Video 1)

Developed by the Alliance for Open Media, AV1 is an open, royalty-free video coding format designed for video transmission over the internet. Its improved compression efficiency delivers better quality than H.264 and H.265 at the same bit rate, which is especially useful for 4K and higher resolutions. Strong support across platforms and browsers, including backing from key hardware and software firms.

As a newer codec, it’s still gaining ground in terms of widespread hardware support and may require more processing power for encoding and decoding compared to older codecs.

🔸 H.266/VVC (Versatile Video Coding)

The latest standard in the H.26x series, H.266/VVC, is designed to provide efficient video compression for a wide range of applications from low-end mobile streaming to high-end 8K broadcasting. Offers a notable compression boost over H.265/HEVC, with claims to cut the data needs for streaming high-quality video in half. Its adaptability allows it to handle a wide range of multimedia, including 360-degree video, screen content, and still photos within video streams.

Streaming Protocols 🎏

These protocols govern how video content is delivered over the internet: they define how it is buffered, played, and managed in the player.

- DASH: Dynamic Adaptive Streaming over HTTP (DASH) is an open, codec-independent standard and an alternative to HLS. It provides adaptive bitrate streaming, which adjusts the quality of the video according to network conditions.

- HLS: Apple created HLS (HTTP Live Streaming), an HTTP-based technology that divides videos into manageable chunks (usually 2–10 seconds) and sends them over regular HTTP connections. Both live and on-demand streaming make extensive use of it.

- WebRTC: A protocol designed for low-latency streaming of real-time communication, such as video calls and live interactive streaming.
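As a rough illustration of the chunking that HLS relies on, the Python sketch below builds a minimal media playlist for an on-demand video. The 6-second segment length and the `segmentN.ts` file names are assumptions made for the example; a real packager would also produce the media segments themselves and usually several renditions.

```python
import math
from typing import List


def segment_durations(total_seconds: float, segment_seconds: float) -> List[float]:
    """Split a video into fixed-length segments plus a shorter final one."""
    if total_seconds <= 0 or segment_seconds <= 0:
        raise ValueError("durations must be positive")
    full, remainder = divmod(total_seconds, segment_seconds)
    durations = [segment_seconds] * int(full)
    if remainder > 0:
        durations.append(remainder)
    return durations


def media_playlist(total_seconds: float, segment_seconds: float = 6.0) -> str:
    """Build the text of a simple HLS media playlist (.m3u8) for VOD content."""
    durations = segment_durations(total_seconds, segment_seconds)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # Upper bound on segment length, in whole seconds.
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for index, duration in enumerate(durations):
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(f"segment{index}.ts")  # hypothetical segment file name
    lines.append("#EXT-X-ENDLIST")          # no more segments will be added
    return "\n".join(lines)


playlist = media_playlist(20.0)
print(playlist)
```

The player fetches this text file over plain HTTP, then requests the listed segments one after another, which is what lets HLS ride on ordinary web servers and CDNs.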

📌 Adaptive Bitrate Streaming

This technique is essential for ensuring continuous video playback across a variety of network conditions. It involves encoding the same video at several bitrates (e.g., 300 Kbps, 1 Mbps, and 3 Mbps) and dynamically switching between them according to the network speed at that moment.

Adaptive bitrate streaming is compatible with both DASH and HLS.
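A very simplified version of the player-side decision might look like the Python sketch below. The ladder reuses the example bitrates from the text, while the throughput samples and the 0.8 safety factor are assumptions; real players typically also weigh buffer level and smooth their measurements over time.

```python
from typing import List

# Bitrate ladder in kbps, reusing the example figures from the text.
LADDER_KBPS: List[int] = [300, 1000, 3000]


def pick_rendition(measured_kbps: float,
                   ladder: List[int] = LADDER_KBPS,
                   safety: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a share of measured throughput.

    Falls back to the lowest rendition when even that one does not fit.
    """
    budget = measured_kbps * safety
    candidates = [bitrate for bitrate in sorted(ladder) if bitrate <= budget]
    return candidates[-1] if candidates else min(ladder)


# Hypothetical throughput measurements (kbps) taken before each segment request.
throughput_samples = [5000, 2500, 900, 200, 4000]
choices = [pick_rendition(sample) for sample in throughput_samples]
print(choices)  # [3000, 1000, 300, 300, 3000]
```

As the measured throughput drops, the player steps down the ladder rather than stalling, and it climbs back up once conditions recover.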

📌 Transcoding and Transmuxing

Transcoding: Re-encoding video content to change its codec, bitrate, or resolution. It is essential for delivering content in several formats and resolutions (720p, 1080p, and 4K) to accommodate different devices and internet speeds.

Transmuxing: Converting a video’s container type (from .mp4 to .mkv, for example) without re-encoding the video or audio streams. It is used to ensure compatibility with different platforms or devices.

CDNs (Content Delivery Networks) also play a crucial role in delivering heavyweight video content around the globe.
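One common way to do both is the FFmpeg command-line tool. The Python sketch below only builds the command lines so the difference is easy to see; the file names, the 720p target, and the 3000 kbps bitrate are placeholders, and it assumes an FFmpeg build that includes the `libx264` encoder.

```python
import shlex
from typing import List


def transmux_cmd(src: str, dst: str) -> List[str]:
    """Change only the container: '-c copy' copies the streams without re-encoding."""
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


def transcode_cmd(src: str, dst: str, height: int, video_kbps: int) -> List[str]:
    """Re-encode the video at a new resolution and bitrate."""
    return [
        "ffmpeg", "-i", src,
        "-vf", f"scale=-2:{height}",  # resize, keeping aspect ratio and an even width
        "-c:v", "libx264",            # H.264 video encoder
        "-b:v", f"{video_kbps}k",     # target video bitrate
        "-c:a", "aac",                # AAC audio encoder
        dst,
    ]


# Placeholder file names; nothing is executed here.
remux = transmux_cmd("input.mp4", "output.mkv")
rendition = transcode_cmd("input.mp4", "output_720p.mp4", 720, 3000)
print(shlex.join(remux))
print(shlex.join(rendition))
```

To actually run one of these, the list could be passed to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed. Transmuxing is typically much faster than transcoding because no frames are decoded or re-encoded.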

The video streaming revolution has changed the way we consume content and how we interact with the world around us. Streaming has fundamentally disrupted traditional media industries by making high-quality, on-demand video available globally for entertainment, education, and information. It has also enabled people to become content creators, since anyone can now produce and distribute media.

It has altered the social fabric and made room for virtual communities. Whatever form it takes, the impact on society is enormous: redistributing cultural consumption, changing social behavior, and changing how we learn, work, and communicate. Video streaming will continue to alter social norms, bringing new opportunities and difficulties in a fast-growing digital world.

Future of Video Streaming 🌱

In conclusion, the evolution of video streaming has been nothing short of revolutionary, transforming how we consume content and connect with the world. From the early days of buffering videos to today’s seamless 4K and even 8K streaming, the journey has been fueled by advancements in compression, cloud computing, and AI. But what’s next? The future of video streaming looks even more exciting, with technologies like 5G, augmented reality (AR), and virtual reality (VR) poised to take center stage.

Imagine immersive experiences where you’re not just watching a concert or a sports game but feeling like you’re right there in the action. As bandwidth improves and AI personalizes content like never before, the line between creator and viewer will blur even further. So, buckle up—streaming is about to get a whole lot more interactive, immersive, and, frankly, mind-blowing. The best part? We’re just getting started.

FAQ 💡

What is the history of video streaming, and how has it evolved over time?

Video streaming has come a long way since its inception in the 1990s. From early technologies like RealPlayer to the rise of platforms like YouTube and Netflix, the evolution has been driven by advancements in internet speed, compression algorithms, and cloud computing.

What are the key technologies behind modern video streaming?

Modern video streaming relies on technologies such as adaptive bitrate streaming (ABR), content delivery networks (CDNs), video compression standards like H.264 and H.265, and cloud-based infrastructure to deliver high-quality, buffer-free content.

How has AI impacted the video streaming industry?

AI has revolutionized video streaming by enabling personalized recommendations, optimizing video compression, improving content discovery, and even enhancing video quality through upscaling and real-time adjustments.

What role does 5G play in the future of video streaming?

5G technology is set to transform video streaming by providing faster speeds, lower latency, and greater bandwidth. This will enable higher-quality streaming, support for AR/VR experiences, and seamless streaming on mobile devices.

What are the challenges facing the video streaming industry today?

Challenges include bandwidth limitations, content piracy, the need for better compression technologies, and ensuring accessibility for users in regions with poor internet infrastructure.

How will AR and VR shape the future of video streaming?

Augmented reality (AR) and virtual reality (VR) are expected to create immersive streaming experiences, allowing users to interact with content in new ways, such as virtual concerts, live sports, and interactive storytelling.

What trends can we expect in video streaming over the next decade?

Future trends include the rise of 8K streaming, increased adoption of AI-driven personalization, growth in live streaming, and the integration of blockchain for secure content distribution and anti-piracy measures.