
A Short History of Computing 🧬

The earliest period of computing is known as the ‘pen and paper’ era, and it laid the foundation for everything that followed. The idea of numbers became solid, and numbering systems evolved. Zero as a number is credited to Brahmagupta or Aryabhata, depending on how you look at it. The abacus, the earliest known tool for computation, is thought to have been invented around 2400 BC.

The concept of employing digital circuits for computing, which led to contemporary computers, was first recorded around 1931. Alan Turing modelled computation, resulting in the well-known Turing Machine. The ENIAC, the first electronic general-purpose computer, was introduced to the public in 1946.

The first computer was designed as a machine with cogs and gears, but the idea did not become practical until the 1950s and 1960s, when transistors and then integrated circuits replaced earlier components. In the 1970s, IBM emerged as the computing market leader. In the 1980s, however, software became increasingly vital, and by the 1990s a software company called Microsoft had become the industry leader by giving ordinary people capabilities such as word processing.

During the 1990s, computing got more personal, and the World Wide Web made the Internet navigable through human-readable website addresses. Google then provided the ultimate personal service, free access to the massive public library known as the Internet, and it quickly became the new computing leader. In the 2000s, computing developed once more, becoming both a social medium and a personal instrument.

Classical Computing: The Traditional Approach 🖥️

◾Classical computing principles

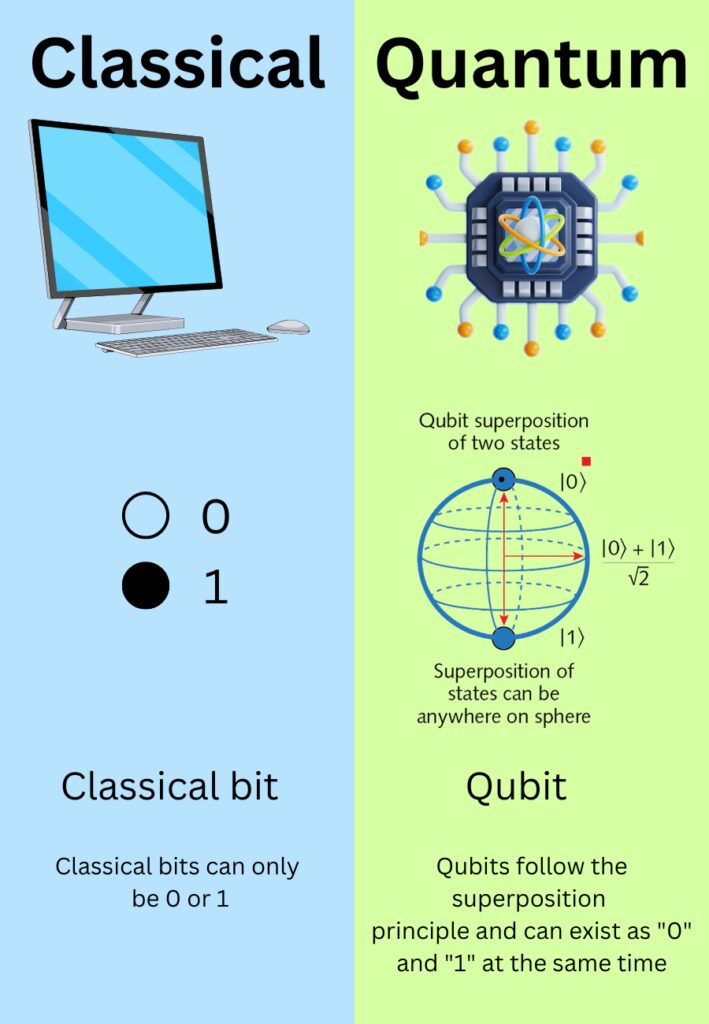

Classical computing is founded on our ability to manipulate binary digits, or bits, each of which can only be zero or one. The bit is the smallest unit of information, and it matters because a computer represents all information as bits. For example, a single bit may indicate whether the answer to a query is yes or no, or whether a statement is true or false.

Bits are manipulated by logic gates: electronic circuits that perform a logical operation on one or more input bits to produce an output bit. AND, OR, NOT, and XOR are all types of logic gates. These gates can be joined together to build more complicated circuits that do more complex work.
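To make the idea of joining gates concrete, here is a minimal sketch in Python. The function names (`half_adder`, `full_adder`) are illustrative choices, not something from a specific library, and real gates are of course electronic circuits rather than functions.

```python
# Basic logic gates on single bits (0 or 1), modelled as plain functions.
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a

def XOR(a, b):
    return a ^ b

def half_adder(a, b):
    """Add two bits; return (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

def full_adder(a, b, carry_in):
    """Add three bits by chaining two half adders and an OR gate."""
    partial_sum, carry_1 = half_adder(a, b)
    total, carry_2 = half_adder(partial_sum, carry_in)
    return total, OR(carry_1, carry_2)

print(half_adder(1, 1))     # sum bit 0, carry bit 1 (binary 10)
print(full_adder(1, 1, 1))  # sum bit 1, carry bit 1 (binary 11)
```

Chaining several full adders in the same way would add multi-bit numbers, which is how simple gates build up to "more complex work".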

Alongside logic gates, classical computing rests on the algorithm, i.e. a set of instructions for completing a certain task. Algorithms can be simple, like summing two numbers, or much more complex, such as sorting a very large data set. What sets computers apart from other machines is that they can store and execute arbitrary algorithms.
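As a small illustration of the two examples just mentioned, the sketch below shows a one-step algorithm (adding two numbers) next to a multi-step one (sorting). Insertion sort is chosen here only because it is short and easy to follow; it is not what you would use on a very large data set.

```python
def add(a, b):
    """The simplest kind of algorithm: a single step."""
    return a + b

def insertion_sort(values):
    """Sort a list by inserting each item into its place among the earlier ones."""
    result = list(values)  # work on a copy so the input is left unchanged
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger items one position to the right.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result

print(add(2, 3))
print(insertion_sort([5, 2, 9, 1]))
```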

◾Some Applications of Classical Computing

Classical computing offers a wide range of applications, including scientific research and engineering, as well as business and entertainment. Here are some instances of how classical computing is utilized today:

Scientific Research: Classical computing is widely employed in scientific research, particularly to model complicated phenomena and simulate experiments. For example, scientists use classical computers to simulate the behavior of particles in a nuclear reactor or the impacts of climate change.

Engineering: Engineers use classical computers to create and test new products and systems. With computer-aided design (CAD) software, they generate 3D models of items and systems, which can then be evaluated and optimized through simulation. This lets them test and iterate on designs more rapidly and efficiently than traditional approaches allow.

Business: Classical computing is also widely employed in business, for tasks such as data analysis, financial modelling, and supply chain optimization. Businesses, for example, use traditional computers to analyse consumer data and predict trends, to undertake financial analysis and forecasts, and to optimize manufacturing and distribution workflows.

Entertainment: Classical computing is widely utilized in the entertainment industry to produce special effects, generate 3D animations, and build video games. For example, video game creators employ traditional computers to design and test game engines, which are the fundamental technologies that power video games.

◾Some Limitations of Classical Computing

Classical computing has many uses, but it is not without limits. One of the greatest is scalability: as the number of bits in a computer system increases, so does its complexity, and design and maintenance become more difficult.

A second disadvantage is that classical computers cannot handle certain types of problems at reasonable expense. For example, they are poorly suited to searching massive unstructured datasets or solving complex optimization problems. Quantum computing, which is based on concepts from quantum mechanics, may be better suited to some of these challenges.

Finally, classical computing is constrained by its energy usage. As traditional computer systems have become more complicated and powerful, they have also used more and more energy. The cost of powering large-scale computing systems has raised concerns about the environmental impact of computing.

Quantum Computing: The Next Frontier 👉

🔸Brief overview of quantum mechanics in computing

The computer industry could see a fundamental change as we progress along the technology curve. The computer systems we have today are based on classical physics and use bits that can only be 0 or 1. Quantum computing, by contrast, leverages the principles of quantum physics and uses quantum bits (qubits), which can be in a combination of multiple states at the same time.

Some problems are too complicated for conventional computers to handle in a reasonable time. Quantum computers could perform some of these computations far faster than a classical computer, which makes such problems an ideal use case for them. Below, we look at the fundamentals of quantum computing and what it could mean for application areas such as consumer electronics, healthcare, finance and more.

🔸A Brief History of Quantum Computing

The history of quantum computing dates to the early 20th century, when quantum mechanics was developed. The idea of using quantum physics to do calculations was proposed during the 1970s, and in 1982 physicist Richard Feynman suggested building a quantum computer. In 1994, mathematician Peter Shor discovered an algorithm that lets a quantum computer factor large numbers efficiently, a task that had been considered intractable.

NMR (nuclear magnetic resonance) was used in 1995 for the first physical implementation of a qubit. In the following years, other qubit implementations were developed using superconducting circuits, trapped ions and other technologies. Today, quantum computing is a rapidly developing field with the potential to bring change to many areas. Nevertheless, hardware and manufacturing constraints, error correction and ethical considerations remain challenges to its mainstream adoption.

🔸The Fundamentals of Quantum Computing

Quantum mechanics is the branch of physics concerned with the behaviour of matter and energy on a very small scale, for example at the atomic or subatomic level. It describes how particles can be in several states at the same time (a superposition). This is very different from the behaviour of macroscopic objects, which is described by classical mechanics.

In quantum computing, the qubit is the basic unit of information. A qubit can be in the state 0, the state 1, or a superposition of the two. In a superposition, the qubit exists in a combination of both 0 and 1 at the same time, and measuring it yields 0 or 1 with certain probabilities. It’s sort of like a coin spinning in the air: neither heads nor tails until it lands.
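One common way to picture a qubit is as a pair of numbers (amplitudes), one for 0 and one for 1, whose squared magnitudes give the measurement probabilities. The sketch below assumes that representation; the helper names `make_qubit`, `probabilities` and `measure` are hypothetical, and this is a classical simulation, not real quantum hardware.

```python
import math
import random

def make_qubit(alpha, beta):
    """Return a normalised (amplitude_of_0, amplitude_of_1) pair."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return (alpha / norm, beta / norm)

def probabilities(qubit):
    """Probability of measuring 0 and of measuring 1."""
    alpha, beta = qubit
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(qubit, rng=random):
    """Simulate one measurement: the result is always a plain 0 or 1."""
    p_zero, _ = probabilities(qubit)
    return 0 if rng.random() < p_zero else 1

zero = make_qubit(1, 0)   # definitely 0
plus = make_qubit(1, 1)   # equal superposition of 0 and 1

print(probabilities(zero))
print(probabilities(plus))  # roughly (0.5, 0.5)
print(measure(plus))        # 0 or 1, each about half the time
```

Note that a measurement never returns "both": the superposition only shows up in the statistics over many runs.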

Quantum Gates and Quantum Circuits

Like conventional computers, quantum computers use gates to perform operations; quantum gates act on qubits. These gates let us manipulate a qubit’s superposition state and operate on all the components of that superposition at once.

Quantum gates linked together make quantum circuits. The types of gates used in a circuit, and the order in which they are applied, ultimately determine the result of the computation. Quantum circuits carry out the processes specific to quantum computing, such as superposition, entanglement, and interference.
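A single-qubit gate can be written as a 2x2 matrix and a circuit as a sequence of such matrices applied in order. The following sketch assumes that standard picture and uses two well-known gates, Hadamard (H) and NOT (X); the helper names `apply_gate` and `run_circuit` are made up for illustration.

```python
import math

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]

def run_circuit(gates, state):
    """Apply the gates one after another, in list order."""
    for gate in gates:
        state = apply_gate(gate, state)
    return state

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]   # Hadamard: creates an equal superposition
X = [[0, 1], [1, 0]]    # quantum NOT: swaps the 0 and 1 amplitudes

ZERO = [1.0, 0.0]

superposed = run_circuit([H], ZERO)       # amplitudes (S, S)
back_to_zero = run_circuit([H, H], ZERO)  # interference undoes the superposition
h_then_x = run_circuit([H, X], ZERO)      # amplitudes (S, S)
x_then_h = run_circuit([X, H], ZERO)      # amplitudes (S, -S): order matters
```

Applying H twice returns the qubit to 0 because the two paths to 1 cancel out, which is a small example of interference.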

Quantum Entanglement and Teleportation

Entanglement is a phenomenon in quantum physics that occurs when two particles are coupled so that their states become correlated. This means that measuring one particle immediately tells you about the state of the other, no matter how far apart they are. Entanglement is one of the most mysterious parts of quantum physics, and it is essential to quantum computing.

Quantum teleportation is a technique for transferring a qubit’s state from one point to another without physically transferring the qubit itself. It relies on a pair of entangled particles, whose correlated state is shared between the two points, together with an ordinary classical message, and it may be used to communicate securely.
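The correlation that entanglement produces can be seen in a small classical simulation. The sketch below assumes a two-qubit state stored as four amplitudes in the order 00, 01, 10, 11, and builds the standard entangled "Bell" state with a Hadamard gate followed by a CNOT gate. The function names are hypothetical, and the code illustrates only the correlation, not the full teleportation protocol.

```python
import math
import random

def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot(state):
    """Flip the second qubit when the first is 1 (swaps the 10 and 11 amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure_both(state, rng):
    """Sample one joint measurement result such as '00' or '11'."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=weights)[0]

start = [1.0, 0.0, 0.0, 0.0]            # both qubits are 0
bell = cnot(hadamard_on_first(start))   # equal mix of 00 and 11

rng = random.Random(7)                  # fixed seed so runs are repeatable
samples = [measure_both(bell, rng) for _ in range(20)]
print(samples)  # only '00' and '11' appear: the two bits always agree
```

Each qubit on its own looks like a fair coin, yet the two results always match.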

🔸Applications of Quantum Computing

Cryptography and Security

Quantum computers will impact security and cryptography. Much standard encryption relies on the computational difficulty of factoring massive numbers; however, a sufficiently large quantum computer could factor such numbers dramatically faster than classical computers. Consequently, once quantum computers become powerful enough, present encryption approaches of this kind will fail.

In turn, the quantum era is driving novel encryption schemes that are resistant to both classical and quantum attackers. These techniques include quantum-resistant encryption, quantum digital signatures, and quantum key distribution.
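Why factoring matters for encryption can be shown with a toy version of RSA, one widely used scheme of this kind. The sketch below uses tiny primes (61 and 53) purely for illustration, so ordinary trial division can play the role of the attacker; real keys use primes hundreds of digits long, and the helper names are hypothetical. It needs Python 3.8 or later for `pow(e, -1, phi)`.

```python
from math import gcd

def make_keys(p, q, e=17):
    """Build a toy RSA key pair from two primes."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must share no factor with (p-1)*(q-1)")
    d = pow(e, -1, phi)          # private exponent: modular inverse of e
    return (n, e), (n, d)        # (public key, private key)

def encrypt(message, public_key):
    n, e = public_key
    return pow(message, e, n)

def decrypt(cipher, private_key):
    n, d = private_key
    return pow(cipher, d, n)

def factor_by_trial_division(n):
    """Feasible only because n is tiny; hopeless at real key sizes."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("n has no non-trivial factors")

public, private = make_keys(61, 53)
cipher = encrypt(42, public)

# An attacker who can factor n rebuilds the private key from public data alone.
p, q = factor_by_trial_division(public[0])
_, cracked_private = make_keys(p, q, public[1])
print(decrypt(cipher, cracked_private))  # recovers the original message, 42
```

The whole scheme is safe only as long as factoring `n` is impractical, which is exactly the assumption that Shor's algorithm threatens.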

Machine Learning and Artificial Intelligence

Quantum computing may also improve machine learning and artificial intelligence by allowing for quicker data processing and better algorithms. Some patterns in data are too costly for classical computers to uncover, so quantum machine learning methods have significant potential to improve technologies such as image and audio recognition, natural language processing, and robotics.

Optimization and Simulation

In addition, quantum computing may solve complex optimization and simulation problems that are impractical for classical computers. These problems arise in many areas, including finance, chemistry and materials science.

That’s because quantum computers can operate on a superposition of many states at once, so for suitable problems they can explore a vast number of candidate solutions much more quickly than classical computers. This could lead to major improvements in areas such as drug discovery, financial modeling, and climate modeling.

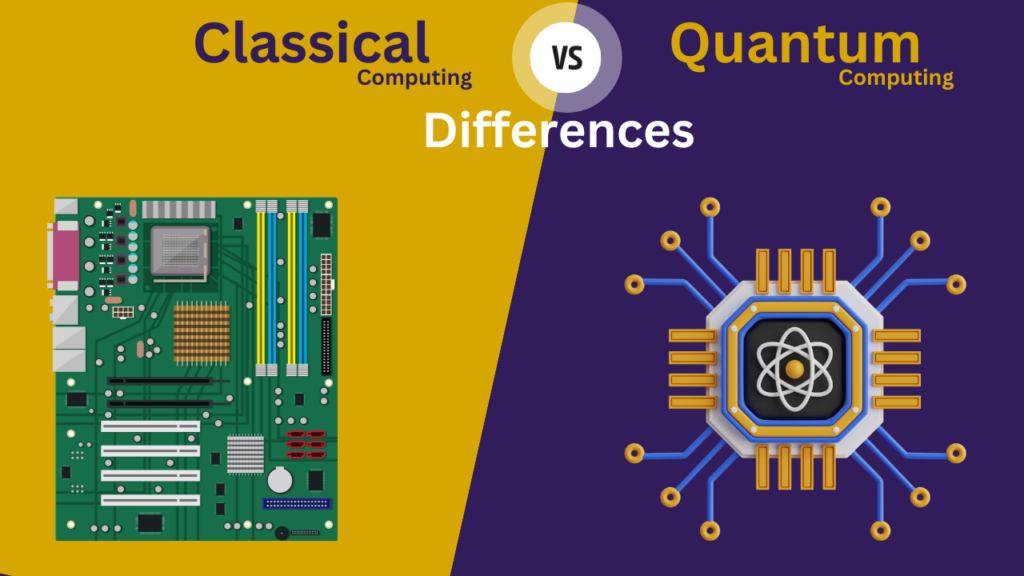

Key Differences Between Classical and Quantum Computing 🎭

🔹Data Representation

Units of data: Bits and bytes vs qubits

Classical Computing:

The basic unit of data in a traditional, or classical, computer is the bit; eight bits make up one byte. Computers encode everything as 1s and 0s, known as binary. These 1s and 0s represent an on or off state; they can equally stand for true or false, or yes or no.

Classical processors traditionally work serially, performing one operation at a time, one after another. Parallel processing extends this model and is used in many computing systems to carry out several tasks simultaneously. Because bits are definite 1s or 0s, classical computations are repeatable: the same calculation always gives the same single result.
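As a quick illustration of bits and bytes, the sketch below converts a character to the eight bits of one byte and back. The helper names `to_bits` and `from_bits` are made up for this example; `ord`, `chr`, `format` and `int` are standard Python built-ins.

```python
def to_bits(value, width=8):
    """Write a non-negative integer as a string of 1s and 0s, padded to `width` bits."""
    return format(value, f"0{width}b")

def from_bits(bits):
    """Read a string of 1s and 0s back into an integer."""
    return int(bits, 2)

letter = "A"
code = ord(letter)       # the character's numeric code: 65
bits = to_bits(code)     # the same number as one byte: '01000001'

print(bits)
print(chr(from_bits(bits)))  # back to 'A'
print(2 ** 8)                # one byte can hold 256 different values
```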

Quantum Computing:

A quantum computer stores data in qubits, which have a quantum property called superposition. While a bit on a classical computer is either a 0 or a 1, a qubit can be 0, 1, or a combination of both at the same time. Because qubits can be in these undetermined states, a quantum computer can work on many possibilities at once and, for some problems, reach speeds that classical computers can’t practically match.

This means quantum computers could solve some real-world problems that would take a classical computer years, if not centuries. That is why experts predict dozens of future applications for quantum computing.

🔹Processing Capability

Classical: Sequential and deterministic

Deterministic: Classical computing runs deterministic algorithms, so the same input always yields the same output.

Sequential Processing: Instructions are executed sequentially, which can limit speed on some kinds of problems.

Quantum: Parallel and probabilistic (exploiting superposition)

Probabilistic: Unlike classical computing, quantum computing gives probabilistic results: rather than returning one definite answer every time, a quantum program returns each possible outcome with some probability, so it is often run many times. Superposition and entanglement are responsible for this.

Parallel Processing: A quantum computer can act on many states at the same time, which is a big help in solving certain types of problems.
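The contrast between deterministic and probabilistic results can be sketched in a few lines. The example below is a classical simulation under simple assumptions: `classical_parity` stands in for any deterministic program, and `sample_qubit` (a hypothetical helper) imitates repeatedly running and measuring a quantum program whose output is 1 with probability `p_one`.

```python
import random
from collections import Counter

def classical_parity(bits):
    """Deterministic: the same input always gives the same output."""
    return sum(bits) % 2

def sample_qubit(p_one, shots, seed=None):
    """Simulate `shots` measurements of a qubit that reads 1 with probability p_one."""
    rng = random.Random(seed)
    return Counter(1 if rng.random() < p_one else 0 for _ in range(shots))

print(classical_parity([1, 0, 1, 1]))  # always 1
print(classical_parity([1, 0, 1, 1]))  # always 1 again

counts = sample_qubit(0.5, shots=1000, seed=1)
print(counts)  # roughly half 0s and half 1s; the exact split varies with the seed
```

This is why quantum programs are usually run many times ("shots") and the answer is read off from the most frequent outcomes.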

🔹Capabilities and Performance

Classical Computing:

Maturity and Reliability: Classical computing technologies are well understood, well tested and widely used. All modern digital technology runs on them.

Versatility: Classical computers are incredibly versatile, offering flexibility across the whole range of applications, from word processing, gaming and web browsing to the most complex scientific simulations.

Predictability and Accuracy: Their deterministic nature means classical computers give consistent, reliable and accurate results, which many high-precision applications need.

Quantum Computing:

Speed and Efficiency: Quantum computers can solve certain problems far more efficiently than classical computers. For example, Shor’s algorithm factors large numbers in polynomial time, whereas the best known classical algorithms need super-polynomial time.

Complex Problem Solving: Quantum computers are expected to shine at tackling very large problems with lots of variables and vast numbers of possible solutions. These include applications in materials science, drug discovery, optimisation, and artificial intelligence.

Exponential Growth Potential: As the number of qubits in a quantum computer increases, the size of the state space it can work with grows exponentially (n qubits span 2^n basis states), opening the door to problems that are impossible to solve today.
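One way to get a feel for this exponential growth is to count how many amplitudes a classical computer would need to store in order to simulate n qubits. The sketch below assumes 16 bytes per amplitude (one double-precision complex number), which is a common but not universal choice; it measures simulation cost, not the useful speed of any particular quantum machine.

```python
def amplitudes_needed(n_qubits):
    """Number of amplitudes in the full state of n qubits."""
    return 2 ** n_qubits

def memory_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory to store that state classically (16 bytes per amplitude is an assumption)."""
    return amplitudes_needed(n_qubits) * bytes_per_amplitude

for n in (10, 20, 30, 40, 50):
    gib = memory_bytes(n) / 2 ** 30
    print(f"{n} qubits: {amplitudes_needed(n):,} amplitudes, about {gib:,.6g} GiB")
```

Every extra qubit doubles the figure, so 30 qubits already need about 16 GiB and 50 qubits are far beyond ordinary machines.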

🔹Challenges and Future Directions

Classical Computing:

Scalability Issues: Scaling classical computers further is becoming harder and harder as their physical and practical limits are approached.

Efficiency for Certain Problems: Classical computing struggles with certain types of problems, such as simulating quantum systems or solving complicated optimization problems, because they demand so much computing power.

Quantum Computing:

Fragility and Error Rates: Quantum systems are extremely sensitive to their environment. Because it is hard to keep qubits coherent, error rates are still very high, and developing error correction remains an ongoing challenge.

Technological and Practical Challenges: It’s complicated and expensive to build and maintain a quantum computer. Today’s quantum computers are still at an early stage of development and are not ready for practical, large-scale use.

Limited Algorithms: Although quantum computing is very powerful, the number of quantum algorithms that can actually be put to use is small. However, researchers are busy developing new algorithms to make the most of quantum computers.

Summary 🥅

The 10 primary differences between quantum and classical computers.

- Information in a Quantum Computer is Stored in Qubits

Classical computers operate on bits, each of which has the value 0 or 1. Qubits, by contrast, can represent a combination of 0 and 1, which is what makes quantum computers so fast for certain tasks.

- Quantum Computers Operate by Means of Quantum Mechanics

Quantum computers depend on quantum mechanics, the physics that governs the level of atoms. Traditional computers work with classical physics.

- The Power of Quantum Computers Increases Exponentially With Qubits

Because qubits can hold both 0 and 1 at the same time, the power of quantum computers rises exponentially as we add qubits. By comparison, the power of classical computers increases linearly with the number of transistors.

- Quantum Computing Operations Rely on Linear Algebra

Since bits are either 0 or 1, they work in accordance with Boolean algebra. Quantum computing, in contrast, uses linear algebra and matrices to define operations and qubit states.

- Programs in Quantum Computing are Probabilistic

In a quantum program, each possible output has an associated probability. Classical programs are deterministic: each output bit is definitely 0 or 1.

- Quantum Computing Operations Must be Reversible

Reversibility is an important property of quantum circuits: it means that we can (essentially) recover the input state of a given operation from its output. Most classical circuits aren’t reversible, although they can be built to be.

- Quantum Computers Have Data Restrictions

The no-cloning theorem states that it is impossible to make a copy of an arbitrary unknown quantum state. That severely restricts the ability of quantum computers to copy data, a restriction classical computers do not face.

- Quantum Computers are Better at Data-Heavy Tasks

It’s doubtful that quantum computers will fully replace classical computers any time soon. Quantum computers are expensive and take a lot of upkeep, so it makes little sense to use them for jobs that classical computers already do far more efficiently. For now, quantum computing is reserved for only the most challenging types of applications, like running deep learning algorithms or drug development.

- Quantum Computers Can’t Operate at Room Temperature

To work properly, many of today’s quantum computers have to be kept close to absolute zero; anything warmer and the qubits start to break down and lose information. This is one of the key reasons it is hard to operate a quantum computer.

- Quantum Computers Aren’t Easily Scalable

Classical computing technology is something we can scale pretty much at will, which is why cloud platforms can put virtually unlimited compute resources at anyone’s disposal. The development of quantum computers is still in its infancy, and qubits are difficult to scale because they are very sensitive to their environment.
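The point about reversibility in the list above can be checked with ordinary truth tables. The sketch below treats gates as functions on classical bits: a gate is reversible when no two inputs give the same output, so the input can always be recovered. AND fails that test, while CNOT (a gate that also exists in quantum circuits) passes it. The helper name `is_reversible` is an illustrative choice.

```python
from itertools import product

def is_reversible(gate, n_inputs):
    """A gate is reversible if every input combination gives a distinct output."""
    outputs = [gate(*bits) for bits in product((0, 1), repeat=n_inputs)]
    return len(set(outputs)) == len(outputs)

def and_gate(a, b):
    """Two bits in, one bit out: information is lost."""
    return (a & b,)

def cnot_gate(control, target):
    """Two bits in, two bits out: flip the target when the control is 1."""
    return (control, target ^ control)

print(is_reversible(and_gate, 2))   # False: 00, 01 and 10 all give 0
print(is_reversible(cnot_gate, 2))  # True

# Applying CNOT twice returns the original input, so it undoes itself.
print(cnot_gate(*cnot_gate(1, 0)))  # (1, 0)
```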

Conclusion 🎯

Classical and quantum computing have very different strengths and weaknesses. Classical computing is here to stay and remains essential for everyday applications and for tasks that require precision and reliability. Quantum computing, however, holds the promise to revolutionize fields that demand a lot of computational power and can take advantage of the probabilistic and parallel aspects of quantum computation.

As research and development in quantum computing continues, we can expect many more commercial quantum systems to be integrated with traditional infrastructure, and a new era of ‘hybrid’ computing may be on the horizon. Put together, the two approaches could open up countless novel opportunities and help solve some of the biggest problems facing humanity today.

Over the coming years, it is possible that the arena of computing will be defined largely by our ability to combine the power of both classical and quantum computing, advancing the computing frontier in many different disciplines.

FAQ 💡

What is the main difference between quantum computing and classical computing?

Quantum computing uses qubits and quantum mechanics principles like superposition and entanglement, while classical computing relies on binary bits (0s and 1s) for processing information.

How does quantum computing achieve faster processing speeds than classical computing?

Quantum computers leverage qubits that can exist in multiple states simultaneously, enabling them to perform complex calculations exponentially faster than classical computers for specific tasks.

Can quantum computing replace classical computing?

No, quantum computing is not expected to replace classical computing entirely. Instead, it complements classical systems by solving specific problems that are currently infeasible for classical computers.

What are the practical applications of quantum computing?

Quantum computing has potential applications in cryptography, drug discovery, optimization problems, artificial intelligence, and simulating complex quantum systems.

What are the limitations of quantum computing compared to classical computing?

Quantum computers are highly sensitive to environmental interference, require extremely low temperatures to operate, and are currently limited in scalability and error correction.

Why is classical computing still widely used despite the rise of quantum computing?

Classical computing is reliable, cost-effective, and well-suited for everyday tasks, while quantum computing is still in its experimental stages and not yet practical for general use.

How do qubits differ from classical bits?

Classical bits can only be in one state at a time (0 or 1), while qubits can exist in a superposition of both states simultaneously, enabling parallel processing.

What industries will benefit the most from quantum computing?

Industries like healthcare, finance, logistics, cybersecurity, and materials science are expected to benefit significantly from quantum computing advancements.